
We find fractals everywhere we look in nature. Fractals are patterns made up of smaller copies of themselves. A simple example is a fern. This picture of a fern was made (using the xfractint computer program) by just repeating the same pattern over and over again. Each of the branch structures on the fern is a smaller copy of the big fern, and those are made of even smaller copies, and so on.

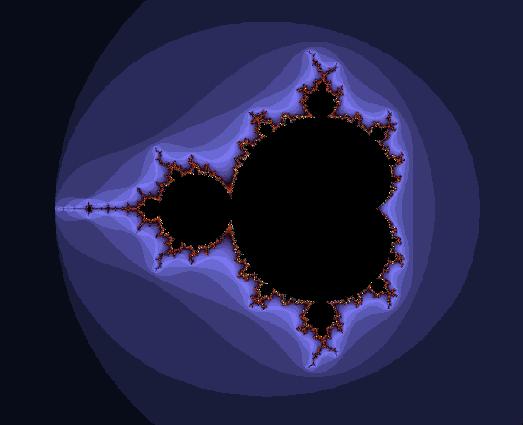

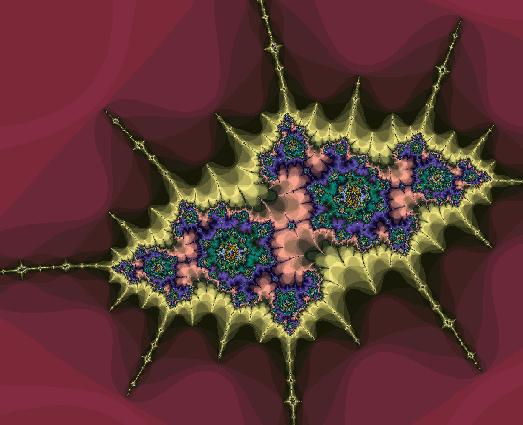

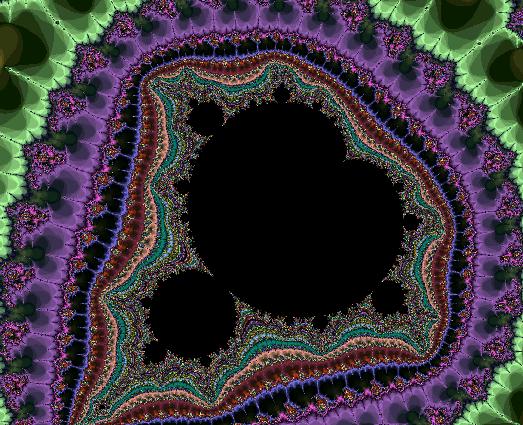
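The "same pattern over and over again" idea behind the fern can be sketched in a few lines of Python. This is only an illustration: it uses the widely published Barnsley fern coefficients, which are an assumption here and not necessarily the ones xfractint used for the pictures above. The function names `fern_points` and `render` are made up for this sketch.

```python
import random

# Four simple rules, each of the form
#   (x, y) -> (a*x + b*y + e, c*x + d*y + f)
# stored as (a, b, c, d, e, f, probability).
# These are the commonly quoted Barnsley fern values (an assumption for this sketch).
FERN_MAPS = [
    (0.00, 0.00, 0.00, 0.16, 0.0, 0.00, 0.01),    # the stem
    (0.85, 0.04, -0.04, 0.85, 0.0, 1.60, 0.85),   # a slightly smaller copy of the whole fern
    (0.20, -0.26, 0.23, 0.22, 0.0, 1.60, 0.07),   # the lowest leaflet on one side
    (-0.15, 0.28, 0.26, 0.24, 0.0, 0.44, 0.07),   # the lowest leaflet on the other side
]


def fern_points(n, seed=0):
    """Apply a randomly chosen rule n times, recording where the point lands."""
    rng = random.Random(seed)
    weights = [m[6] for m in FERN_MAPS]
    x, y = 0.0, 0.0
    points = []
    for _ in range(n):
        a, b, c, d, e, f, _p = rng.choices(FERN_MAPS, weights=weights)[0]
        x, y = a * x + b * y + e, c * x + d * y + f
        points.append((x, y))
    return points


def render(points, width=40, height=24):
    """Draw the points as a crude text picture."""
    grid = [[" "] * width for _ in range(height)]
    for x, y in points:
        col = int((x + 2.75) / 5.5 * (width - 1))
        row = height - 1 - int(y / 10.0 * (height - 1))
        grid[row][col] = "*"
    return "\n".join("".join(r) for r in grid)


if __name__ == "__main__":
    print(render(fern_points(20000)))
```

Four rules and a loop are the whole "description" of the fern, which is the point the text makes about how little a plant would need to keep in its genes.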

Sometimes the result of repeating a simple pattern over and over again is itself simple, as with the fern. But sometimes it can be very complex indeed, as well as staggeringly beautiful. This is the Mandelbrot Set, named after Benoit Mandelbrot who discovered it (and drawn in this case by the xaos program).

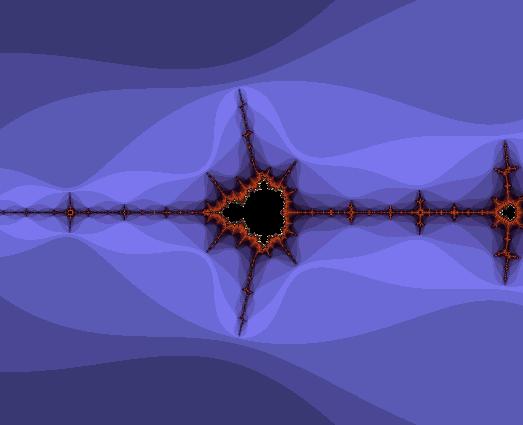

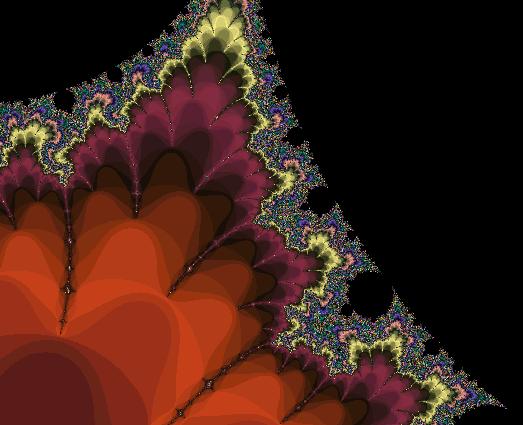

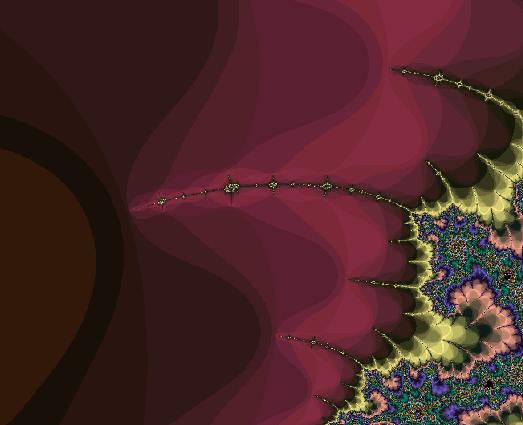

The Mandelbrot Set is made by selecting each point within the image, and applying a simple mathematical rule which moves to another point over and over again. For some starting points, the rule never takes the journey out of a well defined area. These points are all coloured black. For others, the journey quickly takes them a very long way from the start, and they never come back. The blue coloured points that make up the edges are like this. In between the black coloured and blue coloured regions there is an interesting border region, which is sometimes called "the edge of chaos". In this region, the number of hops that it takes before the journey shoots off, never to return, is very variable indeed. Depending on how long it does take, the points are coloured differently. There are three interesting things about this. Firstly, the journey time (colour) of two points that start very close together can be very different indeed. So there is no simple way to predict what colour any point will be just by looking at the points next to it. This is quite different to the black and blue regions, which are not "chaotic" in this way. Secondly, when we do look at the colours of points that are near to each other, we find amazing patterns that emerge out of the chaos. And the more finely we look at the points, the more we see this happening. Thirdly, within the emerging patterns that we see as we look in more and more detail, we find re-appearances of the overall pattern - just like the fern. The following sequence of images was generated by using xaos to zoom into the Mandelbrot Set in ever greater detail, and illustrates these three effects.

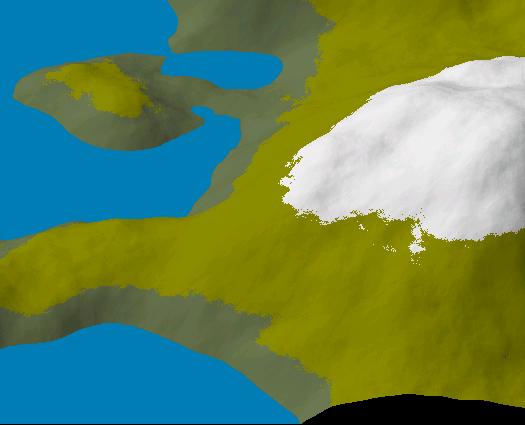

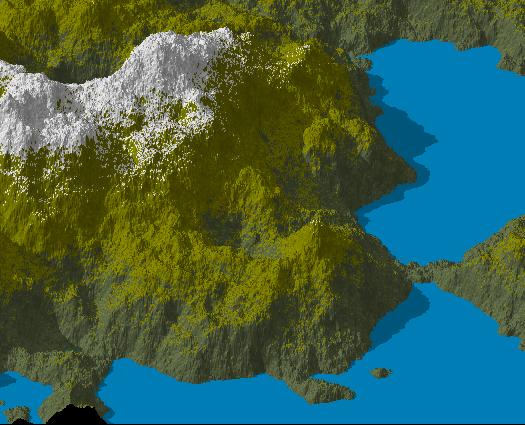
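The "simple mathematical rule" and the counting of hops can be written down directly. The sketch below assumes the standard textbook form of the rule (square the current point and add the starting point, using complex numbers) and the usual test that a journey which gets further than 2 from the origin never comes back. The names `escape_time` and `ascii_mandelbrot` are invented for this illustration, and xaos itself is far more sophisticated.

```python
def escape_time(c, max_iter=100):
    """Count the hops before the journey leaves the circle of radius 2.

    Returns max_iter if the journey has not escaped by then, which is
    how the 'black' points are recognised in practice.
    """
    z = 0j
    for hops in range(max_iter):
        if abs(z) > 2.0:
            return hops
        z = z * z + c   # the rule: square the current point, add the start
    return max_iter


SHADES = ".:-=+*#%"


def ascii_mandelbrot(width=64, height=24, max_iter=60):
    """Crude text rendering: blank inside the set, a 'colour' by hop count outside."""
    rows = []
    for j in range(height):
        im = 1.2 - 2.4 * j / (height - 1)
        row = []
        for i in range(width):
            re = -2.2 + 3.2 * i / (width - 1)
            hops = escape_time(complex(re, im), max_iter)
            row.append(" " if hops == max_iter else SHADES[hops % len(SHADES)])
        rows.append("".join(row))
    return "\n".join(rows)


if __name__ == "__main__":
    print(ascii_mandelbrot())
```

Calling `escape_time` on pairs of points near the border of the set, very close together, is an easy way to see the first of the three effects for yourself.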

Because such complex results can be made from very simple rules repeated over and over again, it is often very hard to say exactly what the rule is when we find a fractal in nature, even though we can be pretty sure that a fractal is involved, because features of whatever we are looking at are very similar, no matter what scale we choose. So a rolling, gentle range of mountains will have rolling mountains within it, gentle hills and valleys making up the features of the mountains, and also gentle terrain at the scale a hill walker would be aware of when thinking about the next step or cup of tea. On the other hand, jaggy mountain ranges have jaggy mountains in them, with jaggy features and plenty of danger of sprained ankles from misplaced footing. The gentleness or jagginess of mountain ranges is similar, whatever scale we look at them. That's a very odd thing, because different geological processes form the shape of ranges of mountains and boulders. It's true though, and that is why computer programs that use fractal rules to make terrain are so useful to the motion picture industry. Here are a couple of simple fractal generated landscapes (drawn by the xmountains program), one gentle and the other jaggy.
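One common way to make terrain of this kind is "midpoint displacement", sketched below for a single slice through a landscape. This is an illustrative assumption, not a description of how xmountains works internally. The hypothetical `roughness` parameter controls how quickly the random bumps shrink as we move to finer scales: small values give gentle terrain, values near 1 give jaggy terrain, and the same setting applies at every scale.

```python
import random


def midpoint_displacement(levels, roughness, seed=0):
    """Return a height profile with 2**levels + 1 samples.

    At each level every segment is split in two and the new midpoint is
    nudged up or down at random. The size of the nudge is multiplied by
    `roughness` (between 0 and 1) each time the scale is halved.
    """
    rng = random.Random(seed)
    heights = [0.0, 0.0]
    amplitude = 1.0
    for _ in range(levels):
        refined = []
        for left, right in zip(heights, heights[1:]):
            mid = (left + right) / 2 + rng.uniform(-amplitude, amplitude)
            refined.extend([left, mid])
        refined.append(heights[-1])
        heights = refined
        amplitude *= roughness
    return heights


def total_climb(heights):
    """Sum of all the ups and downs a walker would meet along the profile."""
    return sum(abs(b - a) for a, b in zip(heights, heights[1:]))


if __name__ == "__main__":
    gentle = midpoint_displacement(8, roughness=0.4)
    jaggy = midpoint_displacement(8, roughness=0.9)
    print("gentle climb:", round(total_climb(gentle), 2))
    print("jaggy climb: ", round(total_climb(jaggy), 2))
```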

The word "fractal" was coined by Mandelbrot from the Latin "fractus" (broken), and it is closely tied to the idea of "fractional dimension", which refers to the way that things fill more space when they are crumpled up. In the same way that each landscape is equally gentle or jaggy in height no matter what scale we choose to look at it, coastlines are gentle or jaggy in the same way no matter what scale we use. Landscapes are fractals that have more than two dimensions but less than three (their fields and valleys), and where they meet the sea they have more than one dimension but less than two (the shoreline).
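For shapes built from exact smaller copies of themselves, a fractional dimension can be worked out with one line of arithmetic: if a shape is made of N copies of itself, each shrunk by a factor s, its dimension is log N / log s. The sketch below applies this standard "similarity dimension" formula to a line, a square and the Koch curve (a textbook jaggy "coastline", used here as an assumed example that does not appear in the text above).

```python
import math


def similarity_dimension(copies, shrink_factor):
    """Dimension of a shape made of `copies` pieces, each 1/shrink_factor the size."""
    return math.log(copies) / math.log(shrink_factor)


def koch_length(level):
    """Measured length of a Koch curve of unit width using a ruler of size 3**-level."""
    return (4 / 3) ** level


if __name__ == "__main__":
    print("line:  ", similarity_dimension(2, 2))   # 2 half-size copies
    print("square:", similarity_dimension(4, 2))   # 4 half-size copies
    print("Koch:  ", similarity_dimension(4, 3))   # 4 third-size copies
    for level in range(5):
        print("ruler 1/%d -> length %.3f" % (3 ** level, koch_length(level)))
```

The Koch curve comes out between 1 and 2, more than a line but less than a surface, and its measured length keeps growing as the ruler gets smaller, which is the crumpled shoreline behaviour described above.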

Nature is fractal at all scales. At the smallest scales studied in quantum mechanics, the sizes of the gaps between electron energy levels, the shapes of magnetic fields and particle pathways are fractal. At the largest scales the positions of the galaxies are known to be fractals across distances of at least 500 million light-years. In between these extremes of scale, we find fractals in the oddest places. The pattern of intervals between drips of a leaky tap is fractal, and even the intervals between human heartbeats (which we thought were regular for hundreds of years) turn out to be fractal once we measure carefully enough and know what to look for. Even the movements of stock market share prices are fractals. The imaginary "mountain range" drawn by the graph of price movements over time has a similar jagginess at all scales, just like the Alps or the Rockies.

Nature is full of fractals. Sometimes with fractals like the shape of the fern, it's easy to see where the fractal came from. The plant would only have to keep the simple rule once in its genes and use it over and over again. Doing that would actually be much simpler than holding the whole description of an entire plant in its genes. But a dripping tap doesn't have any genes, boulders are formed by different processes than mountain ranges, and bays are formed by different processes than continents. Across the cosmos, galaxies that would need a thousand million years just to say "Hi!" to each other are arranged in fractal patterns.

Can there really be a different underlying cause for all the fractals found in nature, or would we now be better off revising what we thought we knew about the fundamental processes of nature, accepting that fractal structure is a fact of life in the universe, and trying to understand what we see in those terms? Are we better off trying to fit fractals into the ideas we had before we discovered them, or should we rebuild our thinking, take the fractals as basic, and work from there? It would certainly mean a lot of work, in a direction that our scientific and technological culture has hardly started to explore. After all, there are plenty of things that we understand that aren't fractal, such as the simple and regular rules that let us predict the path of a football, which we throw into the air and watch as it falls to the ground. Should we - indeed can we - try to fit these simple and regular rules into a fractal pattern, so that we have a single fractal way to understand everything? Astonishingly, the answer to both questions is yes! In fact, the football falling to the ground has already been done (in a way that will become very important in Chapter 4), although even most people who study physics aren't yet aware of it.

These days it is fashionable to believe that at very small scales, where quantum mechanics applies, the universe and even reality itself is a strange and alien place quite different from our normal, day to day understanding of how things behave. Therefore (so the story goes) we should cease to believe that there even is such a thing as reality, because everything we see is made out of stuff that doesn't recognise reality as we know it at all. We'll look at where this unhelpful fashion came from in Chapter 3, and it's a tale that's very useful for understanding how human beings get silly ideas stuck in their heads. For now we'll just mention the way to get back to a single reality which is the same for atoms and people, and has been available for over forty years.

The way people do quantum mechanics in order to design the parts for things like CD players was sorted out by the American physicist Richard Feynman (who we'll be meeting again later). To make calculations in quantum mechanics, Feynman had to find a way to take into account every possible way that a particle could get from one place to another. A straight line journey between place A and place B is one possible way. Going from A to C and then to B is another. And going from A to C to D, back to C, back to D, and then to B is yet another! There are an infinite number of ways that every particle can get from one place to another, and until Feynman got the sums under control by noticing that some routes are much more important than others and found a way to sort them out, the infinite number of things to think about had stopped people doing useful stuff with quantum mechanics. All the complicated, hoppy ways that a particle can get from A to B can't be thought of as a smooth and regular curve at all. They are a fractal. And Feynman's way of dealing with the fractal, hoppy paths involved a tricky bit of mathematics he called a "path integral". (We don't need to worry about what a path integral is here, we just need to know that Feynman invented it to deal with fractal particle paths, and so made quantum mechanics useful.)

As soon as Feynman had sorted out path integrals for very tiny particles, he realised that the same way of doing things could be applied to big things like footballs, where they ended up saying the same thing as the "principle of least action". This has been known about since the middle of the 18th Century, although the roots of the idea date from ancient times. It's another way to calculate the path taken by a flying football, that produces the same result as Newton's way, but does people's heads in (we'll see why later). So Feynman discovered that his path integrals (which describe fractal particle paths) apply equally to tiny sub-atomic particles, to beams of light (which are also handled by quantum mechanics), and also to footballs. It's just that with footballs we don't need to do things the tricky path integral way, because the answer ends up in a much simpler relationship that was discovered by Newton. So if we want to build a bridge, or a moon rocket, we might as well do things Newton's way and be finished in time for supper. But if we are asking the deep questions, we must always remember that the rule that works with the fractal paths of the tiny particles works with the bridges and moon rockets and footballs too. Although the fractal rule is more complicated, it explains much, much more, so if we are going to explain the way the universe works, it's the fractal picture that is the correct one.
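The claim that the "principle of least action" picks out the same path as Newton can be checked numerically for a football thrown straight up. The sketch below is only the everyday, large-scale end of the story, not a path integral: it adds up the action (kinetic energy minus potential energy, summed over the flight) for Newton's path and for a few wobbled alternatives with the same start and finish. The mass, flight time and the `wobbled` helper are assumptions chosen for illustration.

```python
import math

G = 9.81      # gravitational acceleration in m/s^2
MASS = 0.43   # kg, roughly a football (an assumed value)


def action(path, dt):
    """Sum of (kinetic - potential) energy times dt along a sampled path of heights."""
    total = 0.0
    for y0, y1 in zip(path, path[1:]):
        velocity = (y1 - y0) / dt
        kinetic = 0.5 * MASS * velocity ** 2
        potential = MASS * G * (y0 + y1) / 2
        total += (kinetic - potential) * dt
    return total


def newton_path(flight_time, steps):
    """Heights predicted by Newton for a ball that leaves and returns to the ground."""
    dt = flight_time / steps
    v0 = G * flight_time / 2
    return [v0 * (i * dt) - 0.5 * G * (i * dt) ** 2 for i in range(steps + 1)]


def wobbled(path, amplitude):
    """The same journey with an extra bulge added, keeping both ends fixed."""
    n = len(path) - 1
    return [y + amplitude * math.sin(math.pi * i / n) for i, y in enumerate(path)]


if __name__ == "__main__":
    steps, flight_time = 200, 2.0
    dt = flight_time / steps
    true_path = newton_path(flight_time, steps)
    print("Newton's path:", action(true_path, dt))
    for amplitude in (-1.0, -0.1, 0.1, 1.0):
        print("wobble %+.1f m:" % amplitude, action(wobbled(true_path, amplitude), dt))
```

Every wobbled path has a larger action than Newton's path, so choosing the path of least action reproduces Newton's answer.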

So the evidence to date is that we can rethink our understanding, and see the world as a richer, multi-layered thing, filled with fractals, that just happens to look smooth in certain places, at certain times. If we accept this and get on with it, we'll learn more and become more powerful.

Fractal patterns are spread across different kinds of canvases. Mountain ranges, coastlines and the positions of galaxies are fractals in space. Heartbeats and dripping taps are fractals in time. Electron energy levels are fractals in a context that might not have any equivalent in our day to day experience. And the stock market movements are fractals in a very odd context indeed.

Some of the fractals we see, even if they are found in contexts quite removed from our day to day experience, have a straightforward basis in physical realities. We might not be able to understand what separates one atomic energy level from another one, but the universe certainly does. Every time an atom gives out light of one colour rather than another, an electron has shifted from one energy level to another. A fundamental physical event has occurred. Not all fractals are like this. The stock market movements for example, have no simple physical event behind them. Of course stock market movements ultimately come from physical things, but there are an awful lot of them, and they add together in very complicated ways. What influences whether the price of cotton goes up rather than down? The amount of sunlight and rain falling on cotton crops across the world for sure - there must not be too much or too little of either for the cotton plants to thrive and produce a bumper harvest. When cotton is plentiful the price goes down, and when it is scarce the price goes up. Then there are the relative strengths of the national currencies of the principal cotton exporters and importers. The state of labour relations in dockyards. The current level of hysteria (and drug taking) amongst stock market speculators. The strength of currencies is influenced by the fortunes of politicians (including unexpected events like sexual misconduct being discovered by newspapers). Labour relations are influenced by many factors including the local climate (people are less likely to strike in winter), the success of local sports teams (the boss is less annoying if everyone is on an up from last weekend's football match) and the pay settlements of workers in other industries. And so on. There is no single cause that adds up, over decades, to produce a fractal pattern in the price of cotton. And many of the factors that influence the price aren't physical at all. They are pure ideas. 
What some people think other people think about something that might not even happen at all!

In other words, some fractals (such as stock market movements) are found in the realms of abstract concepts - even abstract concepts cooked up by humans operating a particular economic system in a particular political system in a particular era. We usually think of boulders and taps as more concrete - more "real" - than notions like "consumer confidence" or the fickle fashions of recreational drugs on Wall Street. This is so even though the people who study matter at the smallest scales have a very hard time telling us exactly what any physical object that we can see actually is. An understanding of quantum mechanics actually makes it much harder to tell where the edges of a boulder are, while a two year old child does not have this problem! We have an inevitable bias towards thinking of things that we can kick as more real than things we can't kick, but the universe does not see it that way. As far as the universe is concerned, anything that can have an argument made in favour of its existence does exist, and if it exists, it's a candidate for arranging in fractal patterns, no matter what kind of abstract "space" it exists in. As far as the universe is concerned, all spaces are equal, no matter how "real" or "abstract" this particular species of hairless monkeys chooses to think of them.

So very quickly, as soon as we start to think about things in this kind of way, we come to the idea that within the universe, anything that we can see is as real as anything else. That when we are small we can see only the most superficial, physical kind of real things, and as we get older we learn to see other, to us more abstract, real things. As a species we have no reason to think we have found all the layers of abstraction where real things are found. More likely, we haven't even started. And the things that happen in the universe, the behaviour of the most superficial boulders and dripping taps, happen in accordance with the real things going on across all the layers of abstraction, whether we have discovered them yet or not. All the layers of abstraction are doing business with each other, all the time. It's chaos - but within the chaos we find order emerging. Within the layers of abstraction we find relationships that are echoed in many other situations. The same "exponential" relationship describes the growth of a population of rabbits in a field, and the increase of excited neutrons flying around inside an exploding atom bomb. How much reality can we see? As much as we can learn to see. And because of the way the patterns of patterns emerge, each layer of learning equips us to see more, without our brains exploding from the complexity of it all, because as we learn to see more deeply we also learn to see more generally. We can use the deeper ideas in lots of different situations. As we'll see later, this core idea is something most human cultures have never really come to terms with. How many times do we meet people who think that learning is about rote memorising unstructured facts, rather than becoming familiar with a richer space? Yet this idea, that deeper ideas enable us to handle much more of the world, is the only reason why we attempt to do science. 
We are looking for the better way to see, the deeper and simpler way to understand the superficial complexities that are all around us. When we do science, we want to understand the hidden layers that are moving the boulders and the dripping taps around.

When we find an aspect of reality that is governed by hidden fractal patterns, we can use this fact to "run the fractal" in either of two ways. For example, forests are arranged in fractal patterns. We can use this fact to compress digital pictures of forests and make them much more efficient to transmit over the Internet. To do this we have to process the image and find the simple rules that have to be repeated over and over again to produce the picture. This is "running the fractal" in the compression direction. We then transmit the rules instead of the original image. At the other end, another computer just applies the rules over and over again, and recreates the original picture. This is "running the fractal" in the generation direction.

Fractal image compression can be very, very powerful. (The JPEG 2000 standard is sometimes mentioned in this connection, but it is based on wavelet transforms, a different multi-scale technique, rather than on fractal compression.) It makes sense to consider fractal compression for transmitting all sorts of images, because everything we might photograph (that is - everything) is arranged in fractal patterns. The only problem with fractal compression is that it takes much more processing to run in the compression direction and find the rules, than it does to run in the generation direction and apply the rules. This is because applying the rules involves the computer doing a well defined and simple task, many times. It's the kind of thing that even small processors can easily do. Finding the rules isn't like this. There are no rules for finding the rules for making an image. The only thing we can do is try lots and lots of different rules, and concentrate on tricks that help us guess more quickly.
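The difference between the two directions can be seen in a toy example. The sketch below is not a real image compressor. It assumes a very small family of rules of the form "cut every interval into equal pieces and keep only some of them", which is how the classic Cantor set is made. Running a rule is a short loop, while recovering the rule from the finished pattern is done here by plain guess and test. The names `generate` and `find_rule` are invented for this illustration.

```python
from fractions import Fraction
from itertools import combinations


def generate(pieces, kept, depth):
    """Generation direction: apply the rule `depth` times.

    Each interval is cut into `pieces` equal parts and only the parts
    whose positions are listed in `kept` survive. Returns the surviving
    intervals as (start, width) pairs.
    """
    starts = [0]
    for _ in range(depth):
        starts = [s * pieces + k for s in starts for k in kept]
    width = Fraction(1, pieces ** depth)
    return frozenset((s * width, width) for s in starts)


def find_rule(target, depth, max_pieces=5):
    """Compression direction: try candidate rules until one regenerates the target."""
    guesses = 0
    for pieces in range(2, max_pieces + 1):
        for size in range(1, pieces + 1):
            for kept in combinations(range(pieces), size):
                guesses += 1
                if generate(pieces, kept, depth) == target:
                    return pieces, kept, guesses
    return None


if __name__ == "__main__":
    # The Cantor set rule: cut into 3, keep the first and last piece.
    pattern = generate(3, (0, 2), depth=4)
    print(len(pattern), "intervals in the pattern")
    print("recovered rule and number of guesses:", find_rule(pattern, depth=4))
```

Even in this tiny family the search has to test several wrong rules before it finds the right one, and in a real compressor the family of candidate rules is vastly larger.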

Why are there no rules for guessing the rules? Is it that we just haven't discovered them yet? Probably not. There probably are no rules at all. To understand why this is so, we have to take a journey (in our minds) around the entire universe!

Start by thinking of an aerial photo of part of a forest, taken with a reasonably good camera. If we put it through image compression, we get a much smaller chunk of data that can be reconstructed to produce the original. This means the original data contained a lot of redundancy. There was a lot of stuff in there that didn't need to be there to store the data. There was a lot of repetition that could be removed. Now think of a picture of the whole forest and the surrounding terrain, taken by the magnificent optics on the Hubble Space Telescope. This photograph can show all the trees in the original, to the same level of detail, but it also shows a whole lot more. If we put this photo through image compression, we can achieve much more compression than we could with the first picture. In part this is because the rules that worked for the smaller picture can also be used for parts of the bigger picture too, so they don't need to be included again. We can use the foresty rules to describe parts of the forest that weren't included in the first photo. But it is also because when we make the picture bigger we get a chance to find deeper rules that apply to the forest as well as the lakes and cities around it. Perhaps the edges of all these regions can be described by fractal rules, so we end up with simple rules for making regions, and then a few more simple rules for filling them in, in different ways - water, buildings, trees. The bigger the picture, the deeper (more powerful) the rules can be - so long as we have the processing power to find them. The more input data we have, the more redundancy we can get rid of.

Now imagine doing image compression of the whole universe, which is filled with fractals all over the place. If the trends we've seen to date carry on (and we know the galaxies are arranged in fractal patterns so there's no reason to think they don't), we could get better compression for a picture of the whole universe than for any other picture. When we do image compression of the whole universe, we get something with no wasted data in it. Every bit of the compressed image is needed. Nothing in it is a copy of anything else. So we can't transform any part of the compressed picture of the whole universe into any other part by just applying simple rules to it. If we could, that would be redundancy that we could get rid of, and the picture would not yet be compressed!
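The argument that a properly compressed picture has no redundancy left in it can be tried out with any compressor. The sketch below uses Python's general-purpose `zlib` module as a stand-in (it is not a fractal compressor, and the "forest" is just an assumed stream of random words from a small vocabulary). Compressing the data once removes most of the repetition, and compressing the result a second time gains essentially nothing.

```python
import random
import zlib

# A tiny made-up vocabulary standing in for the repeated features of a forest.
WORDS = [b"oak", b"pine", b"birch", b"fern", b"moss", b"lake", b"rock", b"path"]


def make_forest_text(n_words, seed=0):
    """Build a long, repetitive stream of words."""
    rng = random.Random(seed)
    return b" ".join(rng.choice(WORDS) for _ in range(n_words))


def squeeze(data):
    """Compress with zlib at its highest setting."""
    return zlib.compress(data, 9)


if __name__ == "__main__":
    original = make_forest_text(20000)
    once = squeeze(original)
    twice = squeeze(once)
    print("original:        ", len(original), "bytes")
    print("compressed once: ", len(once), "bytes")
    print("compressed twice:", len(twice), "bytes")
```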

To complete the trip around the universe, just run the fractal in the generation direction, and recreate the original again. You end up with something that is filled up with fractal patterns, some of which come from very, very deep rules indeed. Yet each rule is a unique thing in the compressed form. Wander around in the generated version (the universe of superficial things that you can kick) and you'll find yourself in the middle of a huge collection of fractal patterns that you cannot find by just applying simple rules to patterns that you have already found. What's more, because of the way order emerges from chaos, and similar patterns appear even where there are no exact repetitions (look at the examples from the Mandelbrot Set at the start of this chapter), you'll be able to find new patterns in an efficient way by guessing and testing. Which is exactly what we see.

So the universe of boulders and dripping taps is filled with patterns, which are themselves arranged into patterns, which are themselves arranged into patterns, and all the patterns fit together, in all the ways that we have discovered, and (we can be sure) many, many other ways that we have not discovered yet. This is because all the patterns that we can find are unique parts of a single universal master pattern, which contains no redundancy. Therefore we can't find the patterns within the single universal master pattern by following simple rules. To find the patterns we have to guess and test to see if we are right. Our guesses can get better the more parts of the universal master pattern we are already familiar with. The more parts of the pattern we guess, the more we understand the secret world of boulders and dripping taps and mountains and heartbeats and sub-atomic particles and galaxies. And the more we understand, the more powerful we become because we get more chances to manipulate those objects to our own ends. We still don't know how things got to be this way, but it's a physical fact that our culture exploits (without really thinking about it) in many, many ways every day. We'll look at how things got to be this way in Chapter 4.

Because we can "run the fractals" in two ways (generation and compression), it follows that we can do two kinds of thinking. One way follows rules, and is pretty easy to do. The other way uses guesses based on experience and is harder to learn. People who do science sometimes call the rule following way "deductive thinking" and the guessing way "inductive thinking". All the good stuff in science comes from people who have done inductive thinking, for the simple reason that deductive thinking can never discover new things. All it can do is rearrange stuff that we already know. That does not mean that deductive thinking is unimportant though, because we would never have got anywhere in science without it. It's deductive thinking that lets us grind through what we already know, applying it in useful ways and finding things that we haven't yet understood while we are at it. Deductive thinking also lets us test the guesses that come from inductive thinking - which can sometimes be wrong and are no use at all until they have been tested. That's why really effective people are usually good at doing both kinds of thinking at once. When we do both kinds at once, we can find the holes in our existing understanding, cook up guesses to fill in the holes, and test the guesses in a unified kind of fluid thinking that cannot be put into words until it is done and the results are available.

People who don't use scientific language also talk about deductive and inductive thinking, but they use different words. Deductive thinking is often just called "thinking", and inductive thinking is called "intuition". This is unfortunate because it suggests that we are only thinking if we are doing stuff with "therefores" in it, and intuition isn't thinking. But it is.

Whatever kind of thinking we do, we need some kind of hardware to do it with. If we can do a kind of thinking, we have evolved a part of our bodies to do it. Here we can take an example from computer design. Modern digital computers are very good at doing deductive operations (let's not call it thinking, because computers aren't conscious yet and it's not realistic to claim that an unconscious machine can think, however powerful it is). They are not very good at doing inductive operations, which is why it takes so much more processing power to do image compression than it does to reconstruct the image. On the other hand, there are alternatives to digital computers that are much better at doing inductive operations. One example is neural networks, which hold their inputs in a mess of electronic relationships that their designers are not usually even interested in looking at, and which are amazingly good at correctly identifying faces from different angles, under different lighting conditions and so on. Another example is soap bubbles! We don't usually think of soap bubbles as computers, but there are minimum energy problems that can be solved by soap bubbles in the blink of an eye (so long as we can find a way to describe the problem as a wire frame for the soap bubble to form over), which would take digital computers many hours to solve - and even then the digital computers sometimes get the wrong answer!

So it is reasonable to assume that because humans can do both kinds of thinking, we have two kinds of thinking hardware to do it with. Where this gets interesting is when we ask what kind of thinking is most useful to an animal living wild, in a universe that is full of fractals. If the animal is going to know where to stand to improve its chances of finding food and avoiding danger, the ability to spot the fractal patterns and make guesses is going to be much more important than the ability to do deductive logic. So we would expect inductive thinking to be evolved and developed first, and deductive thinking to come later - if at all. This fits with the way that although we have seen some animals such as chimpanzees and dolphins doing deductive thinking, it isn't that common in the animal kingdom. Yet every animal is good at finding food. We can't just dismiss this ability of animals to find food as coming from instincts that they are executing like computers running programs, because programs that are executed in a "dead reckoning" kind of a way tend to be brittle, and fail if conditions change only slightly. Real animals live in habitats that are always changing, and they must always be adapting. The critters are doing some kind of thinking, and it's inductive, guessing type thinking, moving towards things that are important to them.

We humans weren't always so smart. We are evolved from creatures that could do inductive thinking but not deductive thinking, and had much smaller brains than we have. So perhaps the brain is not the best place to look for the hardware that does inductive thinking. Something older - much older - would be a much better candidate. Of course, this is something that we all know already. When a person has a strong intuition, we call it a "gut feeling". The results of inductive thinking have to be presented as "feelings" because they have to be as useful to cats and dogs and lions as they are to creatures with big deduction capable brains. A head up display like the one that Robocop has wouldn't be much use to a cat, but a "gut feeling" is. And why not put the processing hardware in or near the gut? After all, everything with a gut has to feed it! And far from dismissing "gut feelings" as an idea without any basis, in recent years science has learned there is something called the "enteric nervous system", surrounding the gut and in nerve ganglia near to it, which contains as many nerve cells as the spinal cord, shares the same neurotransmitters that the brain uses, and exchanges a lot of data with the central nervous system. The enteric nervous system can even function without the brain - when nerve trunks that connect the two are cut, gut control functions that are normally performed by the brain can be taken over by the enteric nervous system.

So humans do two very different kinds of thinking, which require two different kinds of hardware. For creatures that live in the wild, the kind that is commonly called "intuition" is essential, but the kind that is commonly called "thinking" is not. The thinking hardware is in the big, new bits of the human brain, but the brain might not contain all the intuition hardware (because few animals have a brain anything like as big as a human's), and modern discoveries confirm the common intuition that the gut does a lot of data processing. Deductive and inductive thinking really are two very different things, as different as running the fractals in the generating or compressing directions.

Some cultures are more comfortable with inductive thinking than others. The people who belong to those cultures are more familiar with the layers of relationships between objects that we can kick, and have evolved languages that enable them to talk about these more complex spaces, and the things that are there, waiting to be seen, once we learn to look. This is an area that has caused a lot of confusion and miscommunication, which we can now begin to sort out.

A good place to start is a person who possesses both kinds of culture. One of the reviewers of an early draft of this book was raised by his own Cree people on a Native American reservation until the age of ten years. He was then kidnapped by the Canadian government and "fostered" by white Canadians until the age of eighteen years. Although this experience was profoundly unpleasant, he ended up with an ability to function within both cultures, although this did not extend to being able to translate between peoples with profoundly different ideas. After he had reviewed the early draft, he commented that the ideas were broadly in accord with the Cree understanding of reality. This was astonishing. If they possessed this advance, why had the Cree or other Native Americans not been able to communicate it to people who did not share their culture? He said:

"It's the words. Words made of twenty-six little letters, and none of them mean anything. It takes years to learn Cree, because every word is a legend, and in order to learn the words, you have to learn the legends. And all the legends are of a particular kind. They are all about situations where a man wants to go in a direction nature doesn't seem to want to go."

The idea of a universe filled with patterns of patterns, none of which can be described in terms of any other, easily makes sense of this statement. The Cree language is based on relationships. The legends describe and identify relationships that are of interest to the people, and tag them with words. To illustrate this point, we can invent such a legend of our own:

One day, Tack the sailor wanted to travel across the lake from West to East, but the wind was blowing from East to West. The wind was blowing in the wrong direction and it seemed that it could not blow Tack where he wanted to go. But Tack looked, and saw that he could make the wind press against the water in the lake by fitting a board under his boat as well as a sail above it. Then his boat would be squeezed between the wind and the water. He could make the boat go a little way East and a long way North. When he didn't want to go North any more, he could change round and make the boat go a little way East and a long way South. And he could keep doing this until he reached the East shore of the lake. Since that day, sailing in the opposite direction to the wind has been called "tacking".

That's exactly the way that we define verbs of course. We have to describe the action that is meant, just like the Cree. Nouns like "cup" or "flagon" or "bucket" are easier. We just point to an example of the category of superficially kickable object that they mean. The difference between the two kinds of language is that Native American languages are based on verbs (which need legends to define them) while most languages are based on nouns (which are defined by pointing to examples). Look at any children's reading primer. You will see lots of pictures of objects with their names written under them. The possible relationships between the objects, and how they can act on each other, are not featured in reading primers. This difference is very important. In the Hopi language for example, there are no nouns at all! It's all done with verbs. There are no words for "cup", "flagon" or "bucket". Instead Hopi speakers have to say things like "for sipping water", "for gulping water", or "for filling bathtub". This might seem to be less efficient for dealing with kickable objects, but in the fractal universe of layers of patterns, it is more efficient. The whole universe is moved around by patterns of patterns, with the kickable objects and what they do just being the end results of the action of the patterns of patterns. Native American languages evolved for dealing with this reality, and can be used to describe a universe of layered mutual actions where the processes are more important than the objects.

From here we can make sense of an idea that puzzles many people who study languages, called the Sapir-Whorf hypothesis. After studying Native American languages, Sapir and Whorf came out with one of the oddest suggestions in history. It's not the idea itself that's odd - it's the way that people aren't very clear about what it was that they actually suggested! Just getting near the idea seems to make people go cross-eyed in their brains! Sapir and Whorf's idea is usually taken as saying that the thoughts people can have are limited by the language that they speak. At its silliest, this is taken to mean that people can't think thoughts that they don't have a word for, which is obviously ridiculous because if that was true, there would never be any new words. Without the word there couldn't be the thought, but without the thought there would be no need for the word! Despite this problem, that's what an awful lot of people take Sapir and Whorf as meaning, even though it's silly. So what were Sapir and Whorf trying to say? Perhaps their idea was that cultures that have languages based on nouns emphasise the superficial physical objects that any two year old can see, and in consequence make the "abstract" world of hidden relationships that we must learn to see as we get older, harder to understand. On the other hand, cultures whose very languages drag the mind away from objects that two year olds can see and into the deeper world of relationships, encourage the growth of their members' awareness from the moment they start to talk. By the time people have become adults, the one will understand the world as being filled with static objects, the other will see a world of perpetually moving relationships - and will be closer to the truth.

It's interesting to see what we can learn by talking to Native Americans, but if we don't happen to be Native Americans ourselves, the benefits are likely to be pretty limited. Fortunately, once we have got these ideas in play, we can easily find a couple of wonderful examples, in our own technological culture. Both of them involve the American genius, Richard Feynman. The word "genius" is much abused. These days it tends to be applied to anyone who can even boot a home computer. This is not what scientists mean when they call a person a genius, which is not something they do very often. There are thousands of creative and able people in the world, who do good, useful and original work all their lives, but who are not thought of as geniuses. To be called a genius a person has to have some kind of special relationship with nature. They have to pull new ideas, that no-one has ever thought of before, out of thin air. It's almost like they can see a crib sheet that no-one else can see. Feynman was such a person, and he spent a lot of his time trying to explain to people how it was done. He liked to do this by telling stories. Feynman legends. One of them went something like this (depending on the version):

One day I was walking through the woods with my father, and he pointed to a bird that was sitting in a tree. "That's a Spencer's Warbler", he said, and then he told me the name of the bird in many other languages. Then he said, "There. I've told you the name of the bird in many different languages, but I still haven't told you anything about the bird. To know something about the bird, you have to look at what it is doing. Then you will know something about the bird."

That's a nice little cautionary tale, but it's the second example that stops more people in their tracks. It involves Feynman and the original genius of physics, Isaac Newton. Newton discovered modern mechanics, and mainly recorded his discoveries in Latin prose, not in the mathematical style we use today. The modern mathematical style was invented by the English Victorian Oliver Heaviside, and what we usually refer to as "Newtonian" physics is nearly always the Heaviside rendition of Newton's ideas. In the 1960s Feynman attempted to throw away the customary way of teaching physics that had grown up higgledy piggledy over the centuries, and instead teach what he knew as elegantly as he could. The lectures were published as The Feynman Lectures on Physics, which are often just called the Red Books by people who find them fascinating, informative and thought provoking.

Things get interesting when we compare the ordering of the tables of contents in Newton's book Principia with the parts of the Red Books that were known to Newton, and the parts of Advanced Level Physics by Nelkon and Parker (the standard British textbook), again that were known to Newton.

| Principia | Red Books | Advanced Level Physics |
| --- | --- | --- |
| Newton's Three Laws of Motion | Energy | Newton's Three Laws |
| Orbits in gravitation (with raising and lowering things) | Time and distance | Pendulums |
| Motion in resistive media | Gravitation | Hydrostatics |
| Hydrostatics | Motion | Gravitation |
| Pendulums | Newton's Three Laws | Energy |
| Motions through fluids | Raising and lowering things | |
| | Pendulums | |
| | Hydrostatics and flow | |

What seems to be distinctive about Advanced Level Physics is that it builds up the complexity of the equations of Heaviside's system, while the two other books are motivated by different intents. Newton starts with his Three Laws, while Feynman gets energy into the picture really early and leaves the Three Laws until later. But once they have defined some terms to work with, both geniuses start by telling us of a universe where everything is always in motion about everything else, and then fill in that picture. They do this long before they discuss pendulums, which are described by easier equations, but are a special case compared to the unfettered planets in their orbits. Advanced Level Physics puts pendulums before gravitation, and deals with the hydrostatic stuff (things to do with columns of water) that both geniuses leave until very late, before it even mentions gravitation. By that time, the student has learned to perform calculations in exams as efficiently as possible, but has built a mental model of a universe of stationary things with oddities moving relative to them.

Algebraically inconvenient though it may be (and while Newton's prose might not be influenced by algebraic rendition, Feynman obviously had to consider it), both geniuses want to get the idea that everything moves in right at the start, while Nelkon and Parker start with a static universe and describe things from there. Perhaps here we can start to understand what the magical crib sheet that the geniuses can see really is. It is a way of understanding the universe in its own terms, as layers of interactions and interlocking movements. Perhaps genius has more to do with a way of seeing, being familiar with the universe revealed by inductive thinking, than having a physically large brain that glows red hot as it performs vast numbers of deductive calculations - even though that is the way genius is often portrayed in our deductive, noun based culture. Perhaps we could all be geniuses if we could only forget the nouns and learn to see things the right way around.

The difference between a universe filled with layers of patterns that can be explored and understood using inductive thinking and one that does not contain this structure, has important practical consequences. For example, it enables us to understand what we are doing when we program a computer.

Computer programming is an activity that is hugely important to the human race. As we get better at programming, we can get machines to do more and more boring - and sometimes dangerous - jobs. That means we can carry on getting the benefits of mass production without human beings spending their lives doing soul destroying work in factories, or damaging their health in mines. The more we can organise and access information with tools like the World Wide Web, the more opportunities open up for vast numbers of people, and as they exercise their creativity, the more everyone benefits. Computers embedded in other machines give us performance cars and washing machines with greater fuel efficiency. Even electric shavers and drills have several thousand lines of program code in them these days, because it takes a lot of intelligence to charge modern batteries to their maximum capacity, and it's the capacity of the batteries that makes modern cordless devices possible. The smarter we can make the machines we have around us, the more comfortable and useful our world becomes, and the more opportunities we gain. Computer programming offers us the ability to create machines of vastly greater complexity than ever before in history, as quickly as we can design them. And once designed, anyone can have the machines, by just downloading them from the Internet. So programming computers is very important.

People who have never programmed a computer might think that the ideas in this section are inaccessible or not relevant to them, but that isn't so. Exactly the same considerations apply to writing job descriptions for human beings doing factory or clerical work, and the issues facing the modern world are exposed in their most extreme forms in the field of computer programming. So to set the scene, we'll start with an example that doesn't involve any machines at all - just people. Imagine that a hotel booking agency wants to set things up so that:

1) Booking clerks can take bookings on the phone.

2) Booking clerks can cancel bookings on the phone.

3) Hotel accounts clerks can send bookings to hotels.

4) Hotel accounts clerks can send cancellations to hotels.

The wise manager will look at 1), and realise that the customer on the phone is talking to the business, not any specific clerk. So she'll write the clerk's job description to put each booking in a neatly organised central pile. The unthinking manager won't "waste time" with the complexity of a central pile. Instead she'll just tell each clerk to hold onto the completed bookings and invent another job - the bookings gopher - who goes round and collects the bookings from time to time, and delivers them to the accounts clerks.

When the wise manager looks at 2), she has no problem. All the clerks can be told to go to the central pile, find the booking that the customer wishes to cancel, and check that the details are correct. The unthinking manager has to make a much more complicated job description. The clerk who is taking the cancellation must check their own pile, then check all the other clerks' piles, then find the bookings gopher and check her pile, and maybe even go to the correct accounts clerk to find the booking that the customer wishes to cancel. The wise manager knows that saving a little in the wrong place can cost a lot elsewhere.

When the wise manager looks at 3), she defines a job description for the accounts clerk that involves remembering which booking in the central pile she has checked up to, and then looking through the new bookings until she has found all the new bookings that must go to the hotels she deals with. The unthinking manager seems to have an easier time with this one, because the bookings gopher just delivers bookings to the correct accounts clerk. This is the efficiency that she buys at terrible cost, dealing with requirements 2) and 4).

The real trouble comes in with requirement 4). It makes no sense at all for the agency to tell hotels about cancellations when the hotel doesn't even know about the original booking yet! Do that and the precious hotels are likely to get very annoyed! Again, the wise manager has no problem here. She just tells the booking clerks to add the cancellations to the central pile along with the bookings, and the accounts clerks won't be able to help sending things out in the right order. She's used the natural ordering of bookings and cancellations arriving at the switchboard to make sure that everything is delivered to the hotels in the right order. But the unthinking manager now has a huge problem. Depending on the route the bookings gopher takes around the office, the accounts clerks can actually receive the cancellations before they receive the bookings! Each accounts clerk has to be told to check every cancellation to make sure they've already seen the related bookings, before they send the cancellations to the hotels.
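The wise manager's arrangement can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Record`, `CentralPile` and `AccountsClerk`, the record fields and the hotel names are all invented here, and the pile is modelled as a simple list that is only ever added to. Each accounts clerk just remembers how far through the pile she has checked.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Record:
    kind: str        # "booking" or "cancellation"
    booking_id: int
    hotel: str


@dataclass
class CentralPile:
    """The neatly organised central pile: records are only ever added."""
    records: list = field(default_factory=list)

    def add(self, record: Record) -> None:
        self.records.append(record)


@dataclass
class AccountsClerk:
    """Remembers which position in the pile she has checked up to."""
    hotels: set
    position: int = 0

    def collect_new(self, pile: CentralPile) -> list:
        new = pile.records[self.position:]
        self.position = len(pile.records)
        # Arrival order is preserved, so a cancellation is never
        # sent out ahead of the booking it refers to.
        return [r for r in new if r.hotel in self.hotels]


pile = CentralPile()
pile.add(Record("booking", 1, "Grand"))
pile.add(Record("booking", 2, "Seaview"))
pile.add(Record("cancellation", 1, "Grand"))

clerk = AccountsClerk(hotels={"Grand"})
outgoing = clerk.collect_new(pile)
print([(r.kind, r.booking_id) for r in outgoing])
# prints [('booking', 1), ('cancellation', 1)]
```

Nothing in the sketch checks that a booking has been seen before its cancellation is sent. The ordering falls out of the shape of the pile, which is the point the wise manager relies on.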

Both managers are getting the same job done. But the unthinking one has a team of bookings and accounts clerks plus a gopher, constantly interfering with each other, doing complicated tasks that are prone to error, and not getting on with the core business in order to do this. Responsiveness to customers who are trying to phone the agency goes down. And the more complicated way of doing it actually requires an additional worker, who (when all things are considered) actually makes work for everyone else, rather than reducing it. Because of this, workplace stress goes up, morale goes down, and absenteeism goes up. Costs go through the roof. The unthinking manager rarely looks at the big picture, so when absenteeism goes up she starts handing out written warnings. This hits morale even harder, and the best staff (the ones who can move easily) start looking for other jobs. This further degrades customer service, and pretty soon the survival of the business is threatened. Most people have seen plenty of businesses in this state, and the state of most computer programs is just the same. Unthinking job descriptions lead to systems failures, whether the system concerned is a computer or a business.

The trouble with programming computers is that as a culture, we're not very good at it. When we started to do it, we ran into problems very quickly. The requirements for the first programs were written down, and people started working through the lists of requirements, writing program code (programs are just very precise job descriptions for machines to follow) to satisfy the requirements with features. As each feature was created, it started to interact with the other features that had already been created - just like the clerical workers in the example interfere with each other. So the programs grew bigger, to cope with all the special cases caused by interactions between features. Because every new feature interacted with all the previously created features, every new feature made the programs grow even more than the last one had. Even with this explosion of program size, the features kept on interacting in ways that the programmers had not thought of, and this caused the programs to fail in service. By the early 1960s, we seemed to have a new world of opportunity at our fingertips but we couldn't pick it up. The "software crisis" had arrived. To cope with the software crisis, people started writing down more and more rules for the programmers to follow when they were converting requirements into program code for machines to follow. These new rules were called "methodologies", and each one was announced as if it was the solution to the software crisis. Some of the new rules were put into computer programs for writing computer programs, called "tools". None of them ever worked. Programs kept growing bigger and bigger and bigger, failing, and eventually becoming completely unmanageable.
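The arithmetic behind this growth can be sketched as follows. The sketch assumes, purely for illustration, that each new feature interacts once with every feature already present and that each such pairing needs one special case. Real programs may well have interactions among three or more features, so this is a lower bound on the effect rather than a model of any particular system. The function names are invented for the example.

```python
def new_interactions(feature_number: int) -> int:
    """Pairings the nth feature forms with the features already present."""
    return feature_number - 1


def total_interactions(feature_count: int) -> int:
    """Total pairwise interactions once feature_count features exist."""
    return sum(new_interactions(n) for n in range(1, feature_count + 1))


for count in (5, 10, 20, 40):
    print(count, total_interactions(count))
# prints:
# 5 10
# 10 45
# 20 190
# 40 780
```

Doubling the number of features roughly quadruples the number of pairings, which is why every new feature costs more than the last one did.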

Even while most people and firms who wrote computer programs found themselves flailing around in complexity they could not control, there were some people who could be twenty-five times more productive at programming computers than others, and managed to avoid getting tangled up in complexity while they were working. If that was true of some bricklayers, the building industry would film the super productive bricklayers to see what they do, and then teach the less productive ones to work the same way. But this does not happen in the computer software industry. The problem is that we can't make a film of someone thinking. Physical activity happens within a thinking person's body, but it isn't visible outside their body, and there are no superficial, kickable bricks for us to see being shifted around. To understand how the super productive programmers work we have to talk to them, and understand how they think.

The naturally effective programmers all share certain characteristics. They talk about something called the "deep structure", which is an interlocking network of relationships that are involved with whatever job they are doing. (This sometimes causes confusion, because people who study languages also use the term "deep structure", to mean something else. Programming "deep structure" is not the same as linguistic "deep structure".) The deep structure seen by programmers has objective existence. When two able programmers look at a problem, they both see - and can talk about - the same deep structure. When they talk about it, they have to use language in a poetic and flexible way, tagging bits of the relationships that they see with whatever word seems to be handy. The words they choose are usually quite "humble" words from practical, day to day experience, and other able programmers understand which bits of the deep structure of relationships involved with their job they are talking about when they use them. When they work, effective programmers regularly look at the code they have written, and see if they can "refactor" it, which means moving it around to remove duplicated bits of logic, so that they end up with one bit of program that is used many ways, instead of many bits of program that all do very similar things. Effective programmers also use "design patterns", which in the words of the architect Christopher Alexander (who inspired the way programmers understand design patterns), are arrangements that we "use over and over again, and never the same way twice". Effective programmers learn as they go. When they have finished a job, they are often able to tell their customers things that the customers never knew about their own businesses, because to make the computers work, the programmers have to think very carefully about what the business is doing, and they have the utterly merciless examiner of the mindless computer to test their work. 
The computer cannot exercise "judgement". It cannot "let people off". If the programmer has really understood the problem, the computer program will work. If the programmer has not understood, there will be a terrible mess. There can be no flannel, no sweet-talking, no obfuscation, no excuses.
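As a small, hedged illustration of what "refactoring" means here, consider two bits of program that do very similar things, and the single bit that can replace them. The notice format and all the function names are invented for this sketch, borrowing the hotel booking agency from earlier as a setting.

```python
# Before refactoring: two bits of program that do very similar things.
def booking_notice_before(booking_id, hotel):
    return "To: " + hotel + "\nRef: " + str(booking_id) + "\nAction: BOOKING"


def cancellation_notice_before(booking_id, hotel):
    return "To: " + hotel + "\nRef: " + str(booking_id) + "\nAction: CANCELLATION"


# After refactoring: one bit of program that is used many ways.
def notice(action, booking_id, hotel):
    return f"To: {hotel}\nRef: {booking_id}\nAction: {action}"


def booking_notice(booking_id, hotel):
    return notice("BOOKING", booking_id, hotel)


def cancellation_notice(booking_id, hotel):
    return notice("CANCELLATION", booking_id, hotel)


# The behaviour is unchanged; only the duplication has gone.
print(booking_notice(7, "Grand") == booking_notice_before(7, "Grand"))
print(cancellation_notice(7, "Grand") == cancellation_notice_before(7, "Grand"))
```

If the agency later decides that every notice needs a date, there is now one place to change instead of two.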

Using the ideas already discussed in this chapter, we can easily see what has been happening to cause the software crisis. Programmers who try to follow rules to define rules are thinking deductively. They start with a statement of the users' requirements, and then they "run the fractal" in the generating direction, causing an explosion of superficial detail from the comparatively simple "rules" in the requirements. It doesn't matter what rules they follow to create rules, they can try a million different "methodologies", and will always end up with the same result. Whenever they think deductively they always run the fractal in the generating direction, and drown in the complexity they have created. On the other hand, the effective programmers are thinking inductively. They run the fractal in the compressing direction, using educated guesses followed by testing their guesses to find the hidden order in the users' complicated sounding requirements, and expose the underlying simplicity of the users' business. As depicted on the cover of one of the classic texts of computer science, they slay the dragon of complexity with the sword of insight. And the poetic language is mandatory.

So if there is a perfectly possible way to do computer programming, why do we have a software crisis? The trouble starts when inductive thinkers try to work within firms where most workers don't use inductive thinking, and are fixated on deductive, noun-based thinking in an unhealthy way. This fixation happens because the firms and most people who work for them exist within cultures which are fixated on deductive thinking, and avoid inductive thinking, for reasons that we will look at in Chapter 2. It causes terrible communication problems. People stuck in deductive thinking can't see the deep structure. They have the mental faculties for seeing it - everyone is born with the necessary faculties, which we share with all other animals on the planet. But in cultures where deductive thinking is dominant, the faculties are suppressed, and people actually start to feel uncomfortable if anything happens that starts to wake them up. So when two effective programmers start to point to bits of the deep structure that they can both see, but can't define in any other way than giving an identical example (just like the Native American legends), their deductively minded colleagues really don't know what they are on about, feel uncomfortable, and start announcing that the effective people are speaking poetically because they simply refuse to use the normal nouns! The deductively fixated people believe that the effective people are "immature" and have some sort of secret codebook which they share, and which they use to translate between the usual nouns and the "nonsense gobbledegook" which the effective people use. The idea that the effective people might be talking about something that can't be talked about using nouns because it's off in the realms of process, action and relationship is "unthinkable" to deductivists, because of their absolute conviction that there is nothing in the universe except superficial, kickable things, and simple procedures to be followed.

Refactoring the programs that have already been written also causes terrible communication problems between deductive thinkers and inductive thinkers. People stuck in deductive thinking believe that they should behave like some kind of mental meat-grinder, which has requirements going in one end, and program code coming out of the other. When each bit of code has been written, it should be frozen, and not fiddled with. The idea that they don't really know any of the requirements until they've seen all of the requirements, because only then can they perform the process of induction and see the hidden patterns (and one little sentence, like "New York office must be able to check the balance at any time" can change how everything is handled), just doesn't come into play. So deductive thinkers regard each bit of written program as finished, while inductive thinkers think of each bit as notes - a semi-digested work in progress - until all of the requirements have been considered. Inductive thinkers also know that the greatest challenges are in the details. We might think we can sort everything out in a "top down" kind of way, starting with quite general top level ideas, and then progressively refining the details, but inductive thinkers know that sometimes, just when we think we have nearly finished, we discover that there is a little detail that we just can't sort out correctly because the overall picture is wrong. So for inductive thinkers, every little detail is in fact a test for the overall design - and sometimes the overall design will fail the test. When this happens we have to go back (the jargon word is "iterate"), change the overall design, and work back down to the details again. So inductive thinkers always see everything as provisional until the whole job is complete. War breaks out because the deductivists see the inductivists trying to "fiddle" with code that is "finished".

The same problem has another effect in risk reduction. Whenever we set out to do a job, we try to reduce the risks that might stop us getting it done. For a person trapped in deductive thinking, the more requirements there are, the more grinding has to be done, and the more risk is entailed. There is a straight line relationship between the size of the requirements and the amount of risk. For an inductive thinker, there has to be enough material in the requirements for some sort of pattern to emerge, before there is any chance of getting the problem under control. As the requirements grow, the risk goes down, until there is enough information to do some serious problem collapse. After that point, risk does indeed start to go up again, as more incidental details start to clutter the big picture. But the deductivist versus inductivist wars are rarely fought in that part of the curve. The usual situation is that the inductivists are trying to get enough of the picture to start to make some sense of it, while the deductivists are fixated on reducing the number of requirements that have to be ground into features to an absolute minimum. Because the deductivists lack the faculties to make use of the bigger picture the inductivists want, they do not understand why the inductivists want it. Because they believe their own perceptions to be complete and perfect, the deductivists tend to get carried away and describe the inductivists' desire to make the problem "harder for the same money" as "completely insane and must be stopped". The way that less effective people use such language, and remain convinced that their more effective colleagues are not just incompetent but also mentally ill - despite their persisting in delivering the goods - is an indication that there really is a perceptual problem involved.

It's when deductivists get their hands on approaches - hints - that are helpful to inductivists and start treating them as instructions to be enforced and policed that the whole business descends into a kind of cruel farce. In their magnificent book "Design Patterns", Erich Gamma and his colleagues extended the thinking of the architect Christopher Alexander to software design. Alexander argued that all buildings must fit with the behaviour of the people who will live in them, as well as the practical realities of the climate and terrain that surrounded them. He advocated a circular process of construction, study and reconstruction which would allow the hidden patterns of relationship between all aspects of a building's use and constraints to reveal themselves. He asserted that by finding the patterns the architect could produce designs of great economy, beauty and functionality. He noticed that within the patterns found in each architecture job there were common forms, relationships that were used "over and over again, and never the same way twice". These included relationships between private and public spaces, the positioning of paths, the positioning of windows and doorways and sight lines produced by features within a building. He described some of these forms, because the more classic design patterns an architect knows, the more easily a new job will be decomposable into a harmonious solution made of those forms. All this makes perfect sense in a universe that is crammed full of patterns at all levels of abstraction, and applies as much to software design as it does to architecture. The only problem is, the designer has to be able to see the patterns in the first place. Where the designer is fixated on superficial kickables, there is no point being tooled up for a journey into the relationships between those kickables. 
In this situation, an instruction to "use design patterns" can only lead to an arbitrary and often ridiculous inclusion of random patterns for no purpose at all, coupled with a belief that because the instruction has been obeyed, the result - however hideous - must by definition be correct. It's like bolting an ironing board on the side of a Ferrari and asserting that the result must be perfection because we have "used components"!

The dispute between those who can only run the fractal in the generating direction, and those who can run it in the compressing direction extends into the individual's perception of their relationship to their work, and to the meaning of competence. For deductivists it is very important that they "know what they are doing". They know what they are doing even before they start work, and their approach is based on marching into a new problem area and telling it what it is - bashing it into pre-existing noun categories whether or not the problem fits in those boxes. To a deductivist, even attempting to learn from the job itself would be an admission of incompetence. A person who learns what needs to be done from the job itself does not "know what they are doing", cannot define the tasks to be performed before they start, cannot draw pretty little charts showing each activity they will perform to an accuracy of a few minutes, and generally does not carry on as if they are just going through the motions of writing a program they have written a thousand times before. And here lies the core of the cultural challenge presented by the Information Age. For several thousand years, first in the fields and more recently in the factories, a culture that blinds most people to the richness of what is going on around them, and makes them into little more than rule following industrial robots, has been able to conceal its own limitations from itself. While the definition of success involved repeating the same behaviour over and over again, people who could do that seemed to be very successful, and it wasn't necessary to ask why they seemed so successful. But this is no longer true. We no longer need to repeat the same behaviours to produce each copy of a computer program - or even each copy of a motor car now that we have computer controlled factories. 
For modern humans, knowing what we are doing does not mean rote memorising some simple physical actions to be repeated in a predictable way. Instead it means being familiar with the inductivist world of relationships that we must navigate if we are going to be able to command the computers to make the cars, the music, or the pharmaceuticals that we want.

So in these terms it's easy to see why traditional industry has a "software crisis", and why it just keeps announcing more rules for solving the problem instead of studying the people who are twenty-five times more productive than most and passing on their skills. As demonstrated in this discussion, deductivists always end up describing the most useful workers in derogatory terms, because they cannot (and dare not) comprehend what they are doing. So rather than valuing those employees, the industry is cognitively constrained to pretending that they aren't productive at all, and announcing another batch of rules.

If all this is true, there should be no software being produced at all. The software industry should be impossible, yet vast numbers of people are employed in it. So what's wrong? The simple yet shocking answer is that no software is being produced, but there is a vast, global pretending industry! Think about it. For all the hysterical marketing hype, when was the last time you saw a new software product, instead of Version 8.9 of something that existed by 1993? Since we can manufacture existing software by copying it, we really don't care about old stuff. It just doesn't signify. What matters in software is the new stuff. In software, we should be producing new stuff, day in and day out, like traditional industry produced the same stuff, day in and day out. Yet since the early 1990s, the global software industry has been trapped in a state where fearful employees pretend to be engaged in performing robotic activities with ever faster repetition rates, rush into meeting rooms to discuss their ever more complex tool configuration issues, and frantically talk up their "genius", as evidenced by all the wonderful "complexity" that they have produced. But nothing ever comes out!

Until recently, it's been possible to conceal the non-productivity of the global software industry by blurring the issues, and falsely applying the standards of traditional mass production (same stuff every day) to the software industry (new stuff every day). Because the software industry can produce the same stuff every day without making any effort at all, it's been possible to hide what is going on. In traditional industries using manual labour, a worker who (for example) engraves a complicated pattern is more productive than a worker who engraves a simple pattern. In the software industry this is not true. A mindless computer can generate software complexity, but only real work by a conscious mind can find simplicity. And selling copy after copy of the same program for outrageous prices, as if the cost realities of traditional manufacturing industry apply - and they don't - has kept revenues flowing in, as Internet connectivity has been rolled out to over a billion human beings.

Even with those unjustified revenues, the whole thing has become a grand mirage, in which many global companies wandered off into the delusional state of sitting around in meeting rooms, creating notional value by selling each others' shares around in circles, and writing it down as profits. The software industry fell into the grip of a climate of fear, hysteria, evasiveness and reality denial which was captured by Scott Adams in his "Dilbert" cartoons.

After a brief period of fertility, during which the software wealth we currently enjoy was constructed by the minority of inductive thinkers in our culture, the deductivist bias took hold, and software production stopped dead. For 10 years, we have been standing at the threshold of the greatest explosion of wealth creation in the history of the species, but we cannot take the step to enter this era, because we are trapped in a cultural obsession with deductivism, and fear of inductivism. We have constructed industrial robots, but we can't make sensible use of them because we can't stop being industrial robots ourselves. The reason for this obsessive fixation with robotism will be described in the next chapter. If we can deal with it, we will be able to make full use of the reality of the fractal universe.

Going back to the example with bookings and accounts clerks that we looked at earlier, we can see that the current explosion of administration and bureaucracy that afflicts the modern world is just the software crisis as applied to job descriptions for people. It coincides with the appearance of the professional "line manager" who does not know anything about the work that she is managing, and concentrates on managing by following procedures. Unlike managers in days gone by, professional line managers do not regard themselves as responsible for whatever happens on their watch. So long as they have followed the rules, they feel that whatever happens - no matter how terrible the disaster - is not their problem. Since things were not this bad in the past, it's reasonable to say that the obsessive fixation on deductive thinking, and aversion to inductive thinking (which is the only kind of awareness that can allow a manager to look at the workplace and spontaneously think, "This is a right mess I've got here!"), is getting much worse. Indeed, it's got noticeably worse, throughout the entire developed world, since around 1990. We appear to be sliding down some kind of cultural plughole!

As we've just seen, the idea that the universe is stuffed full of patterns that we can deal with using our naturally evolved ability to do inductive thinking, but which we've lost sight of because of an unhealthy fixation on our newfangled ability to do deductive thinking, has implications for every aspect of human life. And as we've seen earlier in this chapter, these implications include some of the deepest questions in science. Now we shall turn this idea on the deepest question that science has looked at to date - and on which it has so far made no progress at all. This is the question of our own existence. What is the origin and nature of human consciousness?

In ancient times, this didn't seem to be much of a problem at all. By misunderstanding what the real magicians (including the various prophets who are credited with founding the major religions) were talking about, the religions had got the idea of a spook world, different to this one. The spook world was a different reality, with different rules, which could not be seen, and its existence had to be taken on "faith". Consciousness was a property of "souls", which were objects existing in the spook world, and according to some tellings, the souls were connected to bodies in this reality by invisible silver cords. According to other tellings, the souls were downloaded into bodies in this reality when the bodies were born, and uploaded back to the spook reality when the bodies died. The spook world, souls, silver cords and so on were important because people were really souls, and not bodies at all. Although the souls could not experience the spook world where they really existed because they were trapped in a virtual reality feed coming up the silver cord from this reality, they would be held accountable for what they had done in this reality, according to the rule system of the spook reality. While these tales did nothing to explain what consciousness actually is, they explained it away, by claiming that consciousness could not be explained by the science of this reality. We needed to understand spook reality science to understand consciousness, and that wasn't possible, because we don't have access to spook reality and so we can't do experiments on it.

Then a few hundred years ago, the spook reality started to fall out of favour. Isaac Newton had put mechanics on a firm footing, and people had made machines of great intricacy out of cogs. Then Charles Babbage planned the first digital computer, and his friend Ada Lovelace (the first computer programmer) pointed out that as well as doing sums, this machine would be able to do anything that could be tackled by symbolic manipulation. And in a society that was unhealthily fixated on deductive thinking, that meant a computer could do all of thought. A computer could be conscious, just by grinding its cogs! In the 20th century the cogs were replaced first by vacuum tubes, then by transistors, then by processors containing millions of transistors. But the deductive function of the processors remained essentially the same as that of Babbage's cogs. According to the new thinking, the human brain contained neurons that worked by physical principles that made them equivalent to the cogs. So human consciousness could be explained in terms of the physical operation of structures within the brain. No spook reality, silver cords or similar were required. It all happened between our ears. At first, this approach produced plenty of useful results. Parts of the brain were discovered that behaved in predictable ways in response to sensory inputs, and careful studies of people with damage to bits of their brains made it possible to associate different kinds of awareness with different bits of the brain. These studies have brought great benefits. For example, speech therapists now have processing maps of the brain that enable them to help people who have had severe strokes. Today it's possible to give exercises to people who, just twenty years ago, would have been doomed to a few months of suffering followed by death, and so help their brains rewire themselves so that they can return to a full life. But none of these advances has made any progress with the core question.
What operation, of what structures, gives rise to our subjective sense of our own consciousness?

In the last few years of the 20th century, some thinkers led by the mathematician Roger Penrose started to seriously question the idea that consciousness can be explained by clockwork. Unfortunately they weakened their own argument by picking the wrong target, and asking whether a machine could ever be built that could be conscious. To many people who misunderstood what these thinkers were saying, this seemed to be a silly question. After all, however consciousness happens, it involves something that goes on in our bodies, what happens in our bodies is physical, and we don't need to make it just by the old fashioned method of having sex and waiting a few months. Our bodies are already physical machines, so the question of whether physical machines can be conscious has already been answered. They can. This though, was a misunderstanding of what the critics of the clockwork model were really asking. What the critics were really asking was whether a physical machine can produce consciousness by deductive, clockwork type operations. They made some very persuasive arguments that clockwork can't do the job, and then they did something very curious - they went out and brought back the spook world, complete with silver cords, unknowable realms and all the trimmings! They did it using "scientific" sounding language, talking about quantum mechanical effects so it wasn't obvious what they were doing - perhaps even to themselves. 
Yet the "quantum" spook world they invoked to explain the source of the mysterious consciousness is really no different to the "spiritual" spook world of the ancient religions. The quantum microtubules in the brain, which they suggested magnify the tiny quantum mechanical effects up to large scales, are really no different to the ancient silver cords. And the famously mysterious reality of the quantum mechanical realm, inherently unfathomable by the human mind, in which they hid the origins of consciousness, is no different to the unguessable physics of the "spiritual" spook world of the ancient religions. So although these thinkers certainly performed a great service by pointing out some inherent weaknesses of the clockwork model (and after all, the clockwork model had never come close to even starting to tell us where consciousness comes from), by returning to the spook world model they didn't actually move things forward at all.

When we look at the question again, from the point of view of a universe that must be understood as filled with multiple layers of interacting patterns, all over the place, a strange and interesting possibility becomes available, that science has not yet considered, and which does not require any weird stuff happening in different, spook worlds at all. All we have to do is remember that although we see fractal patterns interacting with each other wherever we look, we don't yet know of any single cause for all these interacting patterns. Something caused them, we just don't know what it is yet. Since we don't know what their cause is, we have no reason to assume any limits on how full of patterns the universe is, or how much they interact. Right now, as you read these words, you are bathed in data. The words on the page are just a tiny fraction of this data that you are consciously aware of at the moment. The rest of the data coming at you is held in smells entering your nose, air currents and temperature variations playing across your skin, the flickering of dappled sunlight as clouds pass across the sky, radio waves from distant galaxies that we usually detect by using radio telescopes, but which touch every physical object on this planet, the food you ate for breakfast including all sorts of additives, the patterns in the decoration on the wall in front of you, the movement of the carriage if you are reading on a train, traffic noise from nearby streets, birds singing, and so on. Gigabytes of data are entering your body every second, and gigabytes of data entered your body every second of yesterday, and the day before that, going right back to the time that you were gestating in your mother's womb. The important thing to realise is that all these gigabytes of data are found in a universe which (as far as we know) keeps everything arranged in fractal patterns. 
When we compare it with the fractally ordered data pouring into you every second of every day, the operation of the structures found in the human brain seems like a very puny source of data. If we just look at the data sources and try to guess where a mysterious effect that we can't explain is coming from, the data coming at us from outside our bodies (but physically part of this universe and therefore available to be studied) is a far more likely candidate than any clockwork operations occurring within the brain.