In Chapter 1 we saw that the new idea of fractal patterns can be applied to all of the universe around us, such that even things that aren't obviously fractals on the surface can be understood by the same approach that can handle fractals. This gives us the opportunity to rethink our prior understanding, and see fractal patterns as the underlying principle of everything around us. The idea that we can "run the fractals" in the generating direction with deductive thinking and in the compressing direction with inductive thinking then gave us a solid base for understanding that these two types of thinking are different, and that we need both. In Chapter 2 we saw how profoundly inductive thinking is missing from most human cultures, and this enabled us to re-interpret what is usually thought of as a form of mental handicap as people "falling out of step" because they retain their faculties when everyone else goes to sleep with their eyes open.

By understanding something of the way the magicians see the universe - as fractal patterns - we have found our way to understanding why the magicians think that most people in most societies don't see most of what is really going on around them. It's because their inductive thinking ability is asleep. Now we will complete the picture of how the magicians think differently to most people, and this will make it possible to understand more of how they see the universe.

We evolved our ability to think deductively as a special tool, in addition to our ability to think inductively. Most people's loss of inductive thinking because of mass boredom addiction is a very recent thing (in evolutionary timescales), which has left the deductive kind of thinking trying to run things on its own. The trouble is, when we do deductive thinking on its own we inevitably fall into a deep and subtle error that makes many of the logical conclusions we reach profoundly wrong. Everything seems to check out as perfectly logical - there don't seem to be any errors in the reasoning at all. And in its own terms, the reasoning really is correct. The error happens because when we lose the background that inductive thinking should provide, whatever we are thinking about deductively becomes its own background. An example is the best way to explain how this can happen.

CYC is an ambitious project to make computers more intelligent by creating a vast network of true facts, all linked together. The idea is that when we read something written by another person, we use lots of little "common sense" facts that we know to make sense of what we read. If we want computers to read news stories or scientific reports and tell us about the ones we might be interested in, perhaps the computers need to know all the little "common sense" facts to make sense of the stories. So the people building CYC have spent years telling CYC little facts. At night they leave the computer running, looking through the facts it knows, and trying to find connections. In effect they try to get it to deduce things from the facts it already knows. One morning, they came in to find that CYC had printed out this remarkable claim:

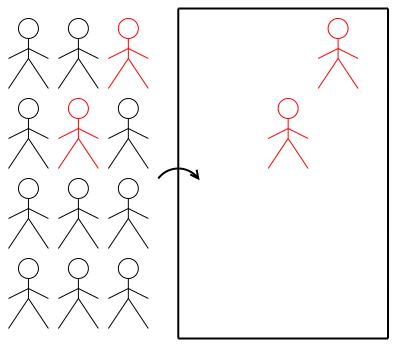

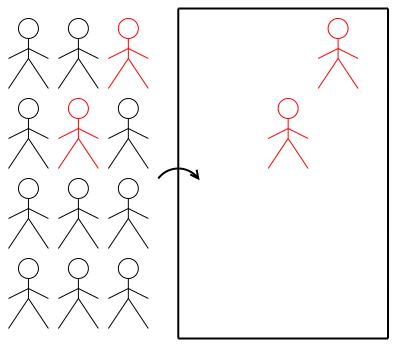

Of course it isn't true, which is not to say that Julie who runs the local shop or Sam who organises the school trip, or Terry who restores antique furniture aren't important. They just aren't famous. So why did CYC deduce (in its unconscious, machine way) that they are? Eventually the researchers realised what the problem was. They'd told CYC about many, many people, and all of them were famous! CYC didn't know that for every "Albert Einstein" who is famous, there are thousands and thousands of Julies and Sams and Terrys who aren't famous. Without the background of Julie and Sam and Terry, Albert Einstein had become his own background, and CYC seemed to be in a world where everyone is famous. CYC had got itself logically inside out. No matter how carefully the CYC program checked the logic, it could never find the flaw - but it would still be wrong.

The deductive mind's problem happens because it works like CYC. It has to select the elements of anything it wants to think about and represent them in its own internal thinking space, where it proceeds to push them around. Inductive thinking works differently. It evolved to take everything available to the person's senses and look for patterns in the whole. So when a person has their inductive thinking ability working together with their deductive ability, it's natural to remember that the elements that are being pushed around have been taken out of their real context, and always bear that in mind in every stage of thinking. The deductive mind never developed the ability to maintain its own grounding in the context of whatever it's thinking about because the inductive mind always took care of that. With a mind that's an integrated mixture of induction and deduction, we are prevented from making mistakes that the deductive mind working alone can't see.

This error is something which every person trapped in deductive thinking must make over and over again. Even worse, the kind of thinking this creates means that the whole culture ends up making the same logical error. Unlike boredom addiction, where it's possible for some people to have a fortunate genetic immunity and retain their full faculties, everyone in a society where inside out thinking is common is in danger of being tricked. The effect is so powerful and so hard to spot that people keep on making mistakes in what they are convinced is logical thinking, and can't see where the problem is, even when they can see that the result doesn't match what happens in reality. This is why inside out thinking needs a chapter of its own to explain where the problem is, even though at root it's just a consequence of most people being caught in deductive thinking, which we've already looked at. The good news is that once people get the hang of what goes wrong and start to break free of robotic fixation, they naturally start to remember context and see new possibilities or solutions to problems opening up, that they didn't notice before. Unlike boredom addiction, there's nothing like withdrawal stress locking inside out thinking into place.

Having seen how the CYC computer made its silly mistake, we can look at two classic errors where humans do exactly the same thing. The first one still puzzles mathematicians (the very people who were so surprised when Kurt Gödel proved that deductive thinking can't do everything). Imagine you are told two facts, like this:

1) Some liontamers are women.

2) Some women are redheads.

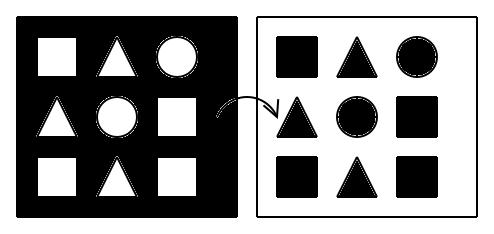

The question is, are there any redheaded liontamers? People usually think about two possibilities (and if they've ever done sets at school they even draw the actual diagrams shown below):

They look at the possibility on the left, and decide that there is no reason, from the information available, to assume that the set of liontamers and the set of redheads don't overlap like the diagram shows. So that can't be correct. Then they look at the possibility on the right, and decide that there is no reason to assume the two sets do overlap either. So they say that the answer is "undefined". The problem as stated doesn't allow them to answer the question. And that, most professional mathematicians would agree, is the correct answer.

If we bear in mind the trap of inside out thinking, we find that there is actually another possibility, which no-one ever thinks of. The deductive mind is a control freak. Like CYC, its awareness is completely limited to its internal thinking space, and it only knows about things that it has copied into its internal space. Like CYC, it has complete knowledge of everything in its internal space, and it doesn't know that anything except its internal space exists, so it thinks it knows everything! This is the error we saw Ouspensky and his fellow students making in the last chapter. The deductive mind doesn't really know everything of course - in fact it hardly knows anything. So from the outside we see that it only pretends it knows everything, and also is so silly that it thinks anything it doesn't know about doesn't exist, or doesn't matter. So to indicate its perfect knowledge of all things, it has to draw see-through circles for the three sets - showing that it knows everything about every little corner of its internal universe - and has the problem of deciding if the set of liontamers overlaps the set of redheads before it does anything else. Both of the choices using see-through circles actually misrepresent the information available, which doesn't say anything at all about the relationship between liontamers and redheads. Let's draw the diagram in a more accurate way:

Now we've shown that although the universe certainly knows if it has any redheaded liontamers in it, we don't know, because that information is hiding behind the set of women, which we can't see into from the information given. This more accurate diagram allows us to give a slightly (but importantly) different answer to the question. We can answer "possibly".

What's the difference between "undefined" and "possibly"? It's that "undefined" tells us absolutely nothing, while "possibly" tells us that we do possess some information about the situation, and so far there is nothing to tell us that there aren't any redheaded liontamers. After all, if our total knowledge consisted of the contents of this box:

We would not know that liontamers do exist, we would not know that redheads do exist, and we would not know that there is a possible meeting ground between liontamers and redheads, because some members of both groups are also women. If we want to meet some redheaded liontamers, that's quite a lot of information - and so far, all of it is positive. We're like a football team that's reached the semifinals. We haven't won the season yet, but our chances of winning are better than they were when we started. Since we started with no information at all and our chances of meeting redheaded liontamers at the start were "undefined", our chances now that we've got some information and the possibility still exists must be something better than "undefined". Because the deductive mind needs to work in its own internal thinking space and can't deal with an open situation like the inductive mind can, it has to pretend that everything in that space is concrete and well defined. By being greedy about certainty in this way, it actually ends up throwing away useful information. This is a habit that is deeply ingrained in deductively fixated culture, which is why you've never seen anyone draw a set diagram like the one above, with the set of women hiding the relationship between the sets of liontamers and redheads instead of joining or not joining them.

Some people who are not trapped in deductive thinking and naturally live in the universe that their inductively capable minds are showing them, look at people doing deductive thinking in this wrong, inside out kind of way, and come to the conclusion that logic is bunk. They're out looking for the redheaded liontamers and sometimes finding them, while the "logical" people are sitting at home, quite convinced that there is no point even trying. Dismissing logic like this, just because some people do it wrong, is itself an error. The whole universe is logical. It all fits together, and we evolved the deductive mind to make use of this fact. When we recognise the error of inside out thinking, we have to be careful to only throw out the error, and not the good stuff. This is why George Gurdjieff always talked about developing the human mind as "perfecting our Objective Reason", and Rudolf Steiner (a magician best known for founding the Waldorf School movement) warned about the danger of developing inductive thinking without developing deductive thinking at the same time, and so becoming fuzzy brained "one sided mystics".

In the example we've just looked at, inside out thinking confuses people's ability to make judgements based on some simple facts because the deductive mind acting alone has trouble understanding that the symbols it has in its internal thinking space represent only partial knowledge of a different, external reality which is complete. To get a sense of how tricky this error can be, we can look at another puzzle (it's great fun to try this one on people because they always get it wrong), and then look at how the error has led to some serious miscarriages of justice.

Imagine you're a TV gameshow contestant. You've reached the final stage of the show, where you try to win the big prize. The gameshow host stands you in front of three doors:

You can't see what's behind the doors, although the host can because she keeps running round behind them. The host explains that there's a car hidden behind one of them (we assume you want a car), and lemons hidden behind the other two (we assume you don't want a lemon). You have to pick a door, and tell the host which one you've picked. Let's say you pick door A. The host then chooses and opens one of the other doors, to reveal a lemon that has been hidden behind it. (Because the host knows what's behind all three doors she can always choose one that's got a lemon behind it.) Let's say she opens door C:

Now she gives you a choice. You can either stick with your original choice of door A, or you can switch to door B, which is the other door that remains unopened. After you've decided, the host will open the door you've settled on, and whatever is behind it, is yours! The question is, should you stick, switch, or doesn't it make any difference?

People always think that it makes no difference, and that's the wrong answer! When you stick with door A you have a 1/3 chance of winning the car, but if you switch to door B you have a 2/3 chance of winning the car, which is twice as often! Let's look at why this is so, and why the confusion between what the universe knows and our incomplete knowledge leads people astray. When you pick a door at first, you have a simple 1/3 chance of picking the door that happens to have the car behind it. You know no more than that, and you can't do any better to improve your odds than just picking at random. When the host opens one of the other doors though, she knows something you don't. She knows exactly which door the car is behind, so she can always choose another door with a lemon behind it to open. When you then decide to stick or change, you are actually faced with a different problem to the one you started with. In the first problem, only one out of three doors had a car behind it. Your first choice is from a collection of two lemons and one car. Then the host removes one lemon from the problem. After she has opened one of the doors, there is only one car and one lemon left in play. So you can never switch from a lemon hiding door to another lemon hiding door. All you can do is switch from a car hiding door to a lemon hiding door (if you happened to pick the car in your first choice), or from a lemon hiding door to a car hiding door (if you happened to pick a lemon in your first choice). Since you had a 1/3 chance of picking the car but a 2/3 chance of picking a lemon in your first choice, you end up with a 1/3 chance of switching to a lemon and a 2/3 chance of switching to the car!

Some people - particularly people with strong technical backgrounds - find this result impossible to believe. So if you're one of these people, try it for yourself. Here's a simple C program that you can use to do this:

    ///////////////////////////////////////////////////////////////////////////////
    //
    // gameshow.c - simulate gameshow problem when contestant always switches.
    //
    ///////////////////////////////////////////////////////////////////////////////

    #include <stdio.h>
    #include <stdlib.h>

    int main(void)
    {
        int CarBehind;   // Door hiding the car (0, 1 or 2).
        int MyFirst;     // Contestant's initial choice.
        int Eliminated;  // Lemon door opened by the host.
        int MySecond;    // Door the contestant switches to.
        int Play;
        int Win;
        int Lose;

        Win = Lose = 0;

        for(Play = 0; Play < 3000; Play++)
        {
            // Position the car.
            CarBehind = rand() % 3;

            // Make initial door selection. Under Linux rand() can be used like this.
            MyFirst = rand() % 3;

            // Eliminate a door: never the contestant's door, never the car's door.
            if(MyFirst == 0)
            {
                if(CarBehind == 1) Eliminated = 2;
                else Eliminated = 1;
            }
            else if(MyFirst == 1)
            {
                if(CarBehind == 0) Eliminated = 2;
                else Eliminated = 0;
            }
            else
            {
                if(CarBehind == 0) Eliminated = 1;
                else Eliminated = 0;
            }

            // Switch doors: take the one that is neither the first choice nor eliminated.
            if(MyFirst != 0 && Eliminated != 0) MySecond = 0;
            else if(MyFirst != 1 && Eliminated != 1) MySecond = 1;
            else MySecond = 2;

            // Add to results.
            if(MySecond == CarBehind) Win++;
            else Lose++;
        }

        printf("%d wins, %d loses\n", Win, Lose);

        return 0;
    }

So what goes wrong here? The problem is that the deductive mind sets up the initial problem in its internal thinking space, which it then treats as "reality", instead of as a partially formed picture of external reality. When the gameshow host adds information to what the deductive mind has by revealing the location of one of the two lemons, the deductive mind doesn't recognise that as an opportunity to improve its picture of external reality. The key issue is that since the deductive mind hasn't pushed a car symbol around, it doesn't believe that the car has "moved", and it can't take into account that the information it started out with about where the car is has just been improved. So to maintain its "grip on reality" it has to insist that there is no point in switching. Because the deductive mind sets its own internal thinking space up as reality, it can't trust the true, external reality to be completely consistent and rely on that fact when it traces what is going on through its own areas of ignorance - which is a point we'll come back to later.

Sometimes the effects of this kind of error can be very serious indeed. Cheap mass DNA screening is now used to hunt for sex offenders and murderers, and has exposed a serious confusion which the legal system has not yet managed to cope with. Imagine you are on a jury, and you've heard the following evidence: A horrible crime was committed, and the forensic scientists found a DNA sample. DNA profiles are very specific (although they don't compare every single gene), and only one person in a million will fit a given profile. The police then mass sampled lots of people, and found a man who fit the DNA profile. There is only a million to one chance of error, and so he is guilty. Would you convict him?

Most people say yes, and people are currently in prison for this reason. But the logic is completely flawed. The problem is that there is no other evidence to link the man with the crime. The police just sampled and sampled until they found one person who matched the DNA profile. So the question isn't about all the people who don't fit the profile. It's about the people who do. If there are 50 million people in the country, there will be 50 people who fit the DNA found at the crime scene. If we just keep sampling until we find one of them, we have one chance in 50 that the person we find first happens to be the criminal. In 49 cases out of 50 we'll just pick up some other unlucky person who happens to fit the DNA profile. So instead of having one chance in a million of being wrong, we actually have one chance in 50 of being right! When the deductive mind looks at the argument in the isolation of its internal thinking space, the prosecution's argument seems very sensible. By itself the deductive mind won't step outside of that closed internal space and wonder about all the other people in the real situation of a country of 50 million people, in which the odds presented in court get switched inside out by all the other possibilities that the prosecution never mentioned.

A similar problem is seen in cases where mothers of babies who have suffered cot death are accused of murdering them. In several cases reputable doctors have given evidence for the prosecution that the chances of a cot death are very slight, so the chances of two babies suffering cot death in the same family are so slight as to be ignored - and so the mothers must have smothered the babies. In fact there's solid scientific evidence that although the cause of cot death is still unknown, there is some genetic or environmental factor that makes some families more likely to experience this terrible tragedy than others, so it isn't like throwing dice, where each throw is completely independent of every other throw. If a family has suffered cot death once, the chances of it happening again are significantly greater. A second death does not mean the mother must be a murderer.

This problem became even more worrying because of a case in the UK in 1996, when the defence attempted to explain the logical error to the jury. The mathematically correct way to cope with statistics like this is called Bayes' Theorem, after Reverend Thomas Bayes, who discovered it in the 18th century. Reverend Bayes' idea says (in a formal mathematical way) that we can start out with our best guess at what will happen, but that as we learn more, we have to adjust the probabilities that we guess for a thing happening or not. We have to be like the gameshow contestant that learns from what the host does, and not like the contestant who starts with an amount of "knowledge" which doesn't change as more data arrive. We always have to be aware of the context of the knowledge that we have. This caused a problem in court, because the correct answer, and the wrong answer that the inside out deductive mind working on its own tends to produce are different. The judge ended up telling the jury that when they think about statistical, scientific evidence, they must not use mathematics, but instead they must use something he called "judgement". In effect, he said that mathematics has nothing to do with science, that they mustn't use reason, and must instead give in to the error because it feels right!
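
In symbols, for a hypothesis H and a piece of evidence E, the theorem is usually written as follows. The symbols H and E are notation introduced here, not taken from the court case.

$$
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}
$$

As a worked example, take the gameshow: you picked door A and the host opened door C. This assumes, as an extra assumption of this sketch, that when the host has two lemon doors to choose from she opens either one with equal chance. Before she acts, each door has a 1/3 chance of hiding the car. She opens C with chance 1/2 if the car is behind A, with chance 1 if it is behind B, and never if it is behind C. So:

$$
P(\text{car behind B} \mid \text{host opens C})
= \frac{1 \cdot \tfrac{1}{3}}{\tfrac{1}{2} \cdot \tfrac{1}{3} + 1 \cdot \tfrac{1}{3} + 0 \cdot \tfrac{1}{3}}
= \frac{2}{3}
$$

The starting guess of 1/3 for door B has been adjusted to 2/3 by what the host did, which is the kind of updating the theorem describes.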

We've seen how the deductive mind working on its own can make serious mistakes because it ends up confusing its understanding of reality with true reality, and this means that it can get its sums wrong. This is not the worst effect of inside out thinking. Things get worse when the loss of context causes people to see everything around them in a dark and confused way. This means that the universe that most people see is a much less interesting, fun and opportunity filled place than the universe the magicians see - and this is something that the magicians are right about.

Every relationship between everything found in the real universe exists by default. Things in the real universe don't need to have special provisions to make their relationships possible. Simply by virtue of existing in the same universe, things have the opportunity and context to relate to each other. For example, every bit of matter in the entire universe exerts a gravitational pull on every other bit. Right now, your feet are exerting a gravitational pull on your nose, and both of these parts of yourself are gently tugging on the Eiffel Tower in Paris, the Taj Mahal in India, the Moon and the stars Rigel and Sirius. Meanwhile, Rigel and Sirius are tugging on each other. We still don't understand how this happens, but it does. Scientists talk about gravitational fields, but all that does is explain what they see happening - it doesn't explain how it happens. Real space is somehow an active medium, which connects everything without anything else being required to allow the connection. In real space, the exceptions are situations where connections are not possible because special measures have been taken to stop the connection being possible. For example, if there are some chickens in a coop and a fox in the woods, the material substance of the coop will prevent the fox getting to the chickens. For another example, if we don't want two electrical wires to make a short circuit we must separate them with an insulator, to prevent a connection from occurring by accident. Science is about discovering the connections that are going on all on their own without any other help or permission required, and we are always finding fascinating connections between things that we never realised were going on. The internal thinking space of the deductive mind isn't like this. It isn't an active space which enables everything to relate to everything else by default.
It's a passive space which things can get transferred into, and which then just hang there, not connected to anything else unless we make special provision to assume that a connection exists.

In reality relationships exist by default, and do not exist if special provisions are made to stop them. In the internal thinking space relationships do not exist by default, and exist if special provisions are made to enable them. So when we take the things that we see in a part of the world and copy them into the internal thinking space of the deductive mind, we turn the whole picture inside out. Because we turn it all inside out at once, we are left with a consistent picture. We can do all the logic we like in the inside out space, and however we check our deductive thinking we'll find no errors. It's just that when we compare our results with reality, we find that what we expect just isn't what happens! The problem is that in our deductive thinking we've done stuff that ignores the context of active real space. When the inductive mind is turned on and has its own correct function, we just wouldn't have let the deductive part of our minds make that kind of mistake in the first place. It's a sneaky problem though, because when they are brought up in a culture that does logic in a deductively fixated way, even people with their inductive minds turned on learn a kind of "thinking" that doesn't use the inductive mind to run a constant sanity check on what the deductive mind is doing. Instead of using both parts of their minds together in an integrated and direct way, they effectively stop using their minds and engage in a robotic, computer-like symbol pushing activity they call "logic".

One consequence of how this turning inside out of absolutely everything catches people is found in how they identify mutually exclusive and mutually inclusive opportunities. It's something the entire culture consistently gets wrong! For example, every time anyone does the sums, they discover that improving industrial quality and reducing costs go hand in hand. The two activities are mutually inclusive. When we improve quality we reduce wastage, improve worker morale, reduce production times, we don't have to cope with returned faulty goods, we spend less on marketing promotions (because the stuff sells itself) and so on. Improving quality always decreases costs. Yet over and over again, people's automatic reaction when they have to cut costs is to reduce quality. They switch the mutually inclusive relationship to a mutually exclusive one, and then extend the faulty logic to the conviction that all they need to do is reduce quality and costs will surely come tumbling down! In recent years, a similar mistake has convinced some people that so long as they are damaging the environment, they must be making a profit, leading to a bizarre kind of anti-environmentalism for its own sake!

On the other hand, at every election we see another batch of politicians promising to reduce taxation and increase public spending at the same time. Everyone knows this just can't add up because the two options really are mutually exclusive. The reason that politicians do it is they know that despite all reason to the contrary, people will actually fall for it. At heart, people don't believe that reducing taxation and increasing public spending are connected in a mutually exclusive way.

This really is a deep logical effect, and not simply sloppiness. We can see this by considering the kind of logic that engineers use to create logic circuits, as used in all sorts of gadgets. When we think about things "logically", we use the basic relationships AND and OR to connect ideas that we think of as TRUE or FALSE. Everything works fine, everything makes sense, until we want to create little electronic circuits to represent these relationships. Then suddenly, we find that nature doesn't seem to want to play by our simple rules! Instead, what engineers find they can construct most simply are two related operations they call NAND and NOR. These are the same AND and OR relationships that humans use, but with the results negated, so a TRUE result becomes FALSE and a FALSE result becomes TRUE. Except that the way nature does it, a NAND isn't made from an AND with an extra component to turn it inside out - it's the AND that's made from what we call a NAND with an extra component to turn it inside out! Just like in the diagram above, we have a consistent way of doing things, and so does nature, and the one is the inside out reversal of the other!

Sometimes this business of turning things inside out because we lose the context as we copy into the deductive mind's internal thinking space produces some very odd results that just don't match what nature does. When this happens, people tend to cope with the discrepancy by pretending that nature does what their "logic" says it does - and carrying on regardless. An example is the application of what is supposed to be a Darwinist philosophy of free market economics as practiced in Europe and America. The Darwinist idea is that living things (and in the analogy businesses too) compete, and in the competition, the strongest survive by wiping out the weaker examples. People who believe this point to animals preying on each other as a justification for their philosophy, but then they have a problem. What tends to happen in this model is that the economy starts out with a diverse range of businesses, interacting with each other to create a diverse range of products for their customers - a business ecology. Then during competition, more and more of the businesses die off until only one monopoly (or artificially maintained duopoly) remains. This dinosaur then becomes slow and bloated until it is finally killed off by the evolutionary catastrophe of some new technology coming along which the slow and bloated business is unable to adapt to. The new technology is adopted by a number of new businesses and for a while the customers enjoy choice and service again. Then the cycle repeats. The Darwinist rationale for setting things up this way is that this occurs in nature. Except it doesn't! What really happens in nature is that ecosystems start simple and over time they get much more complex. We start with a ball of rock swinging through space and end up with the Amazon rain forest (or at least we do until business models supposedly inspired by nature deforest it). So what's gone wrong? Why does competition do one thing in nature and another thing in business? 
The trick is to recognise that although the deductive mind is correct when it copies incidents of competition from the active real space into its passive internal space, it fails to identify the interacting co-operation which is constantly occurring between all elements of the real ecosystem because all the elements are connected by the active space that they share. Animals keep breathing in oxygen and breathing out carbon dioxide. Plants keep doing the opposite. Every element of the ecosystem interacts with all the others in vast numbers of ways, and this provides a co-operative context in which the isolated incidents of competition take place. This co-operative context does not get copied into the internal space of the deductive mind, which ends up seeing competition occurring without any context. That is then the natural "model" that humans seek to emulate, and in the resulting state of total war, it's hardly surprising that desertification of the business ecology happens very quickly indeed.

As the deductive mind acting alone flips inclusive and exclusive relationships, it also converts the open possibilities of the real universe into closed possibilities. In reality, win - win relationships are the ecological norm, but in the internal thinking space of the deductive mind, every winner implies a loser. That's why after they've decided that quality and economy are mutually exclusive, people go on to think they can control economy by reducing quality. If quality loses, economy must win, because in the closed world, there's a winner for every loser. This can lead to people thinking in negatives to a quite remarkable degree, without ever realising what they are doing. For example, very aggressive businesses that are trying to apply what they think of as Darwinistic reasoning usually think that they are being selfish - and if they really were being selfish there wouldn't be a problem. The trouble is, they aren't being selfish at all. They are really being anti-altruistic. Anti-altruism is the inside out version of selfishness, which works on the assumption that if no-one gets a bean without paying for it, the company must do well. On the other hand, quite sincere people who want to be altruistic end up getting caught in the trap of anti-selfishness. This is the mistake of thinking that if we deny ourselves then we must be benefiting others, and it's as big a mistake. What is particularly sad about this example is that in reality, where everyone is connected by active space, it isn't possible to improve our own environment without improving everyone else's as well. This is what every plant and animal in the rain forest unconsciously practices. In this sense, altruism equals selfishness. On the other hand, the business that screws its customers to the point where they'll do anything to find another supplier has nothing in common with the charity worker who flogs herself around in a frenzy of self denial but never actually accomplishes anything useful.

The same pattern of "two wrongs don't make a right" can be seen in the ages-long dispute between people who think they are rational and people who think they are spiritual. In fact, many people who call themselves rational actually police an attitude that is anti-spiritual. To them, rationality is what is left after everything that they don't know a causal mechanism for, every holistic style of thought, and every poetic sensibility has been discounted. A particularly unfortunate example of this was some people's reaction to James Lovelock's Gaia Hypothesis. Lovelock observed that an interesting property of the Earth's ecosystem is that it is out of chemical equilibrium, and stays that way. In this it is the same as any single animal, which stays out of chemical equilibrium throughout its life. It's only when the animal dies that a process of decay sets in, and a chain of chemical reactions occurs which eventually stops when chemical equilibrium is reached and decay is complete. In order to maintain itself out of chemical equilibrium, the living animal is composed of an interlocking network of active systems which compensate for the changes thrust upon it by its environment, keeping it in a stable state which is not chemically stable. From this reasoning, Lovelock argued that because the Earth's ecosystem has survived for millions of years while being impacted by meteorites, and subjected to variations in the amount of energy reaching it from the sun, it too must be composed of interlocking active systems that compensate for changes. Although we don't yet know what they are, we can deduce that these systems must exist, and so go looking for them. In this way we can greatly increase our understanding, which is the purpose of science. Lovelock's thinking was science at its best. Starting with an overall, holistic, poetic impression of what must be happening, he saw a way to structure detailed scientific enquiries.
To communicate his new idea in a simple way, he accepted a suggestion made by the author William Golding and named the idea after the Greek mother goddess, Gaia. This was enough to drive a generation of deductivists demented with ideological rage. It was a poetic, holistic, creative concept. Producing it required a spontaneous recognition of a simple yet profound truth. It was as repugnant as anything could possibly be to people trapped in robotic, reductionist, deductive fixation. Nearly 40 years on, a new generation of biologists, geologists, meteorologists and other specialists have grown up with the Gaia Hypothesis in play. They have not been faced with the trauma of the idea's introduction, and indeed are busy mapping out the interlocking active systems that maintain the Earth's ecosystem out of chemical equilibrium.

On the other hand, many people who call themselves spiritual are actually anti-rational. To them, spirituality is what is left after everything that the deductive mind can handle has been discounted. Paralleling the example of anti-selfishness and anti-altruism, rationality and spirituality are completely compatible (as this book shows in great detail), but anti-spiritual people will never find common ground with anti-rational people, because both groups are thinking in negatives and so are dead wrong.

In the same way, there is a huge difference between not doing anything wrong (the principal concern of most people in work or legal contexts) and getting things right (which satisfies customers and leads to profits). It is the cultural fixation on the inside out issue of not getting things wrong that leads people to avoid problem ownership, so the problems just sit in the middle of the floor, getting worse.

The problem of lost context fuels an unfortunate trait of the deductive mind acting alone, to experience false senses of fear and security. The chattering mind wouldn't be so bad if most of what it chatters about wasn't such complete nonsense! False fear occurs because the deductive mind acting alone doesn't really believe that anything else exists outside the narrow confines of its knowledge. People stumble into boring life situations purely by chance, and get stuck there - because the idea that there might be anything else they could do is then inconceivable. They forget that if they'd happened to go for the washing up job instead of the car park attendant one, they'd still be alive, still be doing things, but they would be different things. So they get stuck in the car park until a hurricane comes along and knocks it over. This same closed world also explains many people's strange unwillingness to experiment because of fear of failure. A rational concern about spending scarce resources unwisely is one thing, but fear of failure in itself is very odd. When we try something new we can either succeed (great) or not succeed (hey ho, and we've learned something new). But in the closed world of the deductive mind acting alone it doesn't seem that way. There is either success which is good, or failure which is the closed world alternative - bad. So just like the "logical" people who won't go looking for redheaded liontamers because they aren't certain to find them, people trapped in this way needlessly deny themselves opportunities for success, even when they are zero cost.

False security comes from the mistaken belief that the only possible dangers are the ones that people are conscious of. Because they've copied some specific dangers that they already know about into their internal thinking space, they come to believe that all they need to do is make explicit provisions to deal with those dangers and they will be safe. This leads to a false sense of security. A good example at the moment is the growth of compulsory drugs testing in the workplace. Before this fashion caught on, managers used to monitor their employees' work. If the work deteriorated, the manager would investigate and find out why. Perhaps the employee's health had deteriorated or she had family problems and needed some compassionate leave to intelligently return her to full effectiveness. Perhaps an underappreciated member of staff had left and her remaining colleagues had found themselves snowed under (that one's amazingly common, because no-one on the ground will ever admit to it). Perhaps the needs of the customer base had changed and more training or resources were needed. And perhaps the employee had a drugs problem. Quite apart from the totalitarian aspects of compulsory drugs testing, the perception that the only thing an employer has to watch out for is employees using drugs in their own time, breeds a dangerous sense of complacency. The same thing happens with computer system security, where it really doesn't matter how many passwords a system has if the latest security patches haven't been applied to the web server, or the corporate network hasn't been designed to be robust in the face of successful attacks, and with airport security where the number of troops walking round the departure lounge with automatic weapons doesn't count for anything if thousands of maintenance workers are entering and leaving the hangars unchecked every day.
Unfortunately in situations like this, the totalitarianism and lack of imagination of herds trapped in deductive thinking work together with the trap of losing context to create situations which are as useless as they are invasive of basic human rights.

Because the deductive mind working alone turns its pictures of reality inside out in such a profoundly consistent way, discussions between people trapped in deductive thinking and people who are magically aware can develop a surreal tone, since for every idea possessed by one group, there is a distorted but related idea possessed by the other group. Worse, the distorted ideas that make up both groups' pictures of reality are related to each other in distorted but related ways, and both groups even use the same words to refer to the inside out versions of the same ideas and relationships. It's possible for members of different groups to have a conversation in which each believes the other to be understanding what they are on about, without any communication taking place at all! A wonderful example of this problem is the dualism of the word "dualism".

When people trapped in their deductive minds use the word "dualism" they are usually referring to a mistaken belief that they have, that magicians believe there is one material universe full of kickable things, and there is also a second, different, spook alternate reality, connected to this one by silver cords or whatever. That makes two universes (they figure), so they say the magicians are "dualists" - believers in two universes. Of course the magicians believe no such thing. Instead they believe that there is this, single material universe, which contains kickable things that are arranged in patterns which are also found in this material universe. Where else could they be? The magicians believe that the patterns are "more material than material itself" - that the patterns govern the kickables to the extent that the kickables are like shadows of the patterns, and the material properties of the material universe are found in the patterns rather than the superficial kickables. It's because the people trapped in their deductive minds can't see the patterns that they assume the magicians are talking about... like... somewhere else.

When people who are magically aware enough not to be trapped in their deductive minds, and magically educated enough to know it, use the word "dualism", they are referring to the trapped people's belief that they are distinct from the universe. This comes from the way that trapped people have to copy from the reality around them into their internal thinking space. Everything gets dragged over a boundary before trapped people can think about it, and the boundary then acts to cut the trapped person off from the rest of the universe. The magicians see the trapped people as peering out at a universe that is different to themselves, which they "observe" and sometimes "struggle" in, but that they are not an integral part of. The magicians see the trapped people as believing that they exist, and the universe exists, which makes two things.

This causes no end of confusion. "You're a dualist", says one group. "Oh yeah", says the other group, "Well you're a dualist too!". Same word, very different ideas, but related in a twisty, inside out kind of a way. Things get even worse when each group tries to communicate with the other by trying to adopt the other's language and calling themselves "non-dualists". It's worth being aware of this because the example of the dualism of "dualism" happens with lots of other words too. Take "alertness". To a teacher trapped in the robotic extreme of boredom addiction, alertness is actually the same as "robotic" and is used to indicate a desirable state, while to a person free of boredom addiction it means "aware of all the surroundings" and is also used to indicate a desirable state, but the two meanings are exactly opposite! It's also worth being aware of this because dualism (in the way magicians use the word) has mind warping effects in addition to the other ones we've looked at already.

People get ever so defensive of the boundary between themselves and the rest of the universe. Data that is already inside the boundary is "true", while data that is not inside the boundary is "not true". This is why a major problem with professional line managers is that they will deny the truth of all warnings of impending problems until a disaster occurs, at which point they protest that they were not told. In some circumstances, this kind of denial coupled with avoidance of problem ownership can lead to problems being "dumped down" onto people who do not have the authority or resources to deal with them. This causes unavoidable stress for the people concerned, which is only made worse if the people concerned actually care about their work. In the United States Postal Service, these stresses led to employees taking firearms into the workplace and shooting their colleagues so often that the phrase "going postal" entered the language.

The concern with boundaries that never existed in the first place appears in debates about the relative effects of "nature and nurture". What really happens is that the universe starts with some empty space, and starts to pour information into that space. The first information arrives coded into base pairs strung along some DNA, then later information arrives in the molecular arrangements of "food" particles, additives in the "food", trendy Vivaldi that's played at the foetus, radio waves from distant galaxies and overhead power lines, episodes of Buffy the Vampire Slayer and even (possibly) a bit of "education". It's all information, terabytes and terabytes of information, contained within the universe, focussing into a particular place and piling up. So why draw an arbitrary boundary around the first information to arrive and call that "nature", and then call everything else "nurture"? Nature nurtures everything by piling up information. It's the only game in town. The concern about drawing boundaries and making distinctions comes from the (magicians' sense) dualists' need to get that boundary up and make themselves distinct from the universe as soon as they possibly can. We can see from the gross external characteristics of the cloned sheep and cats that we already have around - not to mention old-fashioned twins - that the implications the dualists draw from the distinction just aren't supported by nature as it really works.

The same imaginary boundary causes no end of trouble with environmental pollution too. There is no way that I can pollute your planet without polluting my planet too. (This is just the reverse of the idea that altruism equals selfishness because I can't authentically improve my environment without improving yours as well.) If I draw an imaginary boundary around myself and throw lots of toxic waste outside it, I might fool myself for a while but I can't fool nature. Pretty soon I'll have dozens of allergies, my sperm count will go down and my kids will be radioactive just like yours. It's insane to damage the environment in this way, but people do it because they have a deep seated belief in the reality of the boundary between themselves and the rest of the universe.

The same flawed logic informs people's choice when they drive sports utility vehicles (SUVs) on grounds of safety. Road users start in the real situation where injury to themselves likely means injury to other road users too, and so safety of themselves also means safety of other road users too. That's the standard win - win situation supported by nature. It's when people get caught in the trap of inside out thinking that they come to believe that road safety is a closed world where every winner means there has to be a loser. They draw a boundary round themselves using a big metal box, and figure that their new tank has increased their safety at the expense of others'. Unfortunately it doesn't work that way. The SUV is bigger, taller and so less stable. It is more likely to turn over, and when it does they find that the laws of physics apply just as much inside their metal box as they do outside it. They still have inertia, and sudden decelerations still break their bones.

There is no boundary that separates us from the rest of the universe. That's an illusion caused by copying everything into an internal thinking space before we start to think about it, and as ever the solution is to let the impressions of the inductive mind always make us aware of the outside context.

The two traps of boredom addiction and the deductive mind acting alone hide each other, and so protect each other from being noticed and addressed. This happens because the deductive mind tends to perceive its present state of awareness as complete, and this fits with the addictive need to stick to repetitive, boring behaviours. What else could there possibly be to do? It also happens because the deductive mind is not the part of our awareness that looks for consistency, and does not spontaneously notice gaps in our understanding. So people who have their inductive mind closed down by boredom addiction tend to string together rather feeble reasons for things, which they have usually heard from other people, and which don't really stand up to careful thought (if they were in the habit of explaining everything to Astronut as described in Chapter 2, this problem would not occur). This fits with the way the end result becomes less important to boredom addicted people than going through the motions, over and over again. Without the inductive mind to oversee what is happening and look for the big picture, understanding is downgraded to knowledge - rote memorised factoids - and the factoids get broken up into unconnected "subjects". The process of fragmenting and rote memorising knowledge without direct understanding in specific labelled learning situations has itself become a social norm, to which there is no conceivable alternative, and this often stops people realising they have learning opportunities in their own life experiences every day.

To an extent, totalitarianism is necessary when the majority of the population is robotised. A robot that has rote memorised an incorrect program is no use at all, so it becomes necessary to ensure that all robots have memorised the same program. Robots that have memorised different facts need to have the facts they have memorised corrected. This does not make totalitarianism desirable. What is desirable is the awakening of everyone's full awareness. The totalitarian enforcing of social standard facts leads to the most terrible situations when robots who cannot imagine any other state of mind encounter people whose inductive minds are starting to awaken. From the robotic perspective there are compliant robots and defective robots, and nothing else. Merely asking questions or noticing inconsistencies is itself perceived as a defect from within an agenda where this is not appropriate behaviour. The socially required picture of reality is always considered to be objective reality in every culture that is trapped in boredom addiction, so in every such culture there are traditions of psychiatric enforcement of "appropriate" behaviours and opinions, and at the same time every such culture condemns the "abuse" of exactly the same practices by its ideological enemies.

People trapped in deductive thinking suffer from addictive motivation for their behaviours which they do not understand, and also suffer from a fragmented perception of the universe around them, which they do not understand. At the same time they experience feelings of great confidence and mastery of their situation because their boredom addiction maintains high dopamine levels just like cocaine addiction, but they are confused, reactive and (as is always the case in social situations where addicts must be motivated) they spend most of their lives experiencing socially induced fear which contradicts their addictively distorted confidence. This is a mess which they might be able to sort out if their ability to think inductively were available, but it is not. So they suppress their anxiety, and suffer from heart attacks, asthma, irritable bowel syndrome and other stress related disorders instead. When they are performing the suppression, the mistake of taking the reality of the deductive mind's internal thinking space as true reality can be combined with the methods of totalitarianism to shift the social focus from solving problems to pretending that they have been solved. It's well understood that particularly during times of extreme robotism, societies stop caring about their own well being, and start to concentrate on concealing evidence of problems. It is only by fully understanding the consequences of being trapped in deductive thinking due to boredom addiction that we can see why a species that seems to have the ability to be rational can engage in "keeping up appearances" to the extent of threatening the survival of whole societies.

The philosopher and psychiatrist R. D. Laing became aware of what he called the "political" context of enforcing social reality. In his book Knots he expressed his awareness that there was some sort of profound logical form to the social disputes within which one person who is more aware is singled out as unwell - deluded or imagining things which are not true - by others who are less aware. If we understand how the deductive mind acting alone turns the universe logically inside out, switching mutually inclusive and exclusive relationships, converting agreements between positions to disagreements between their anti-positions, and shifting focus from the fully consistent picture of all that exists (whether we know about it or not) to the symbols represented in its internal thinking space, we can see why Laing found such elegant forms of entanglement, so like logic puzzles, surrounding the people who were supposed to be his patients. We can also see why he observed a profoundly poetic style of communication such as he described in The Politics of Experience and The Bird of Paradise on the part of the ensnared people. Their inductive minds had woken up, they were trying to make sense of the inconsistent stories they were being told by the people around them, and without much practice they were seeking to make intuitive leaps and reach full understanding of what was going on. This is not easy in a culture that doesn't even have a word for setting out to obtain an insight!

Laing based his thinking in a philosophy called existentialism, which helped him understand what was happening to the distressed people he was trying to help. The central idea of existentialism is that everyone sees the universe from their own point of view. It rejects simple, rote memorised ideas, definitions of success or notions of how the world "should" be - but isn't. The existentialists don't think human beings should be thought of as perfect, but instead as the flawed, short-sighted, greedy creatures they often are. They then try to deal with that reality in as sensible a way as they can. (It turns out that many of the unpleasant aspects of human nature aren't actually human nature at all, but simply the behaviour of humans trapped in mind numbing addictive behaviour. As Seay's piece on the advantages of ADHD describes, people immune to boredom addiction and members of societies that are free of the addiction are more generous, imaginative and intelligent than pessimistic descriptions of human nature would allow. Even so, the basic problem within addicted societies remains the same, because there immune people are in the minority.)

Existentialist ideas filtered through the whole of the developed world during the 20th century, and became part of the cultural backdrop that we don't even realise we possess - what the philosopher Thomas Kuhn called the "paradigm". As the developed world became more industrialised, the disagreement between the social reality that everyone was supposed to copy into their internal thinking space and their own direct experience became progressively worse. After millions of people had died marching towards industrial killing machines during World War I it became much harder to look at kings and see wise and benevolent rulers - which was supposed to be objective reality. Existentialism replaced this with the idea of personal reality. The king might be a wise and benevolent ruler in his reality, but in my reality he might be a fossil, in your reality he might be something else, and so on. Existentialism said people couldn't just fall back on simple robotic responses from the social reality any more. They had to look around them and make up their own minds about what was right and wrong on the basis of their own experience. So far the magicians would cheer along, but there's also a problem with existentialism that doesn't match the magical view at all. To understand the problem it's necessary to understand the weakness of the deductive mind acting all alone.

The deductive mind copies everything it wants to think about into its own internal space before it starts pushing the symbols around. The inductive mind works quite differently, floating in a sea of impression and waiting for its surroundings to tell it what they are. A person with both halves of their mind working and integrated knows that they are a part of the universe which is doing things all around them. They can see some of those things, they can logically infer other bits thanks to their deductive mind beavering away under the direction of their inductive mind, and there's plenty they know absolutely nothing about at all. They never confuse the deductive mind's internal thinking space with reality, most of which is a complete mystery to them. Such a person knows that reality exists, but none of us understand it fully and we all have different perspectives on it. Each of us perceives aspects of the totality that others do not see. So from the point of view of people with both halves of their mind working together, the ideas of existentialism are so obvious there's really no need to state them!

A person who only has their deductive mind active easily falls into the trap of believing that their internalised picture of reality is all that there is. When the universe does something that isn't already a part of their internal picture they announce to nature, "That is not possible!" When you think about it that is a very silly thing to do indeed, yet it is so much a part of our culture that for years people confidently told each other that it is not possible for bumble bees to fly - despite having seen bumble bees flying every summer. What they were talking about was a failure of the science of aerodynamics. At that time aerodynamics could not explain how bumble bees fly, but because they had a deep seated cultural aversion to acknowledging any reality except their pictures, they were obliged to state this fact in a perverse way, as if nature was stupid, had failed to memorise the socially acceptable "facts", and so failed to comply with their internal reality. This is not a trivial point, as we can see when we think about what happened when people in this state got their hands on existentialism. If reality consists of internal pictures and nothing else, and everyone can have their own internal picture, then there is no shared context through which we all communicate or in which we have responsibilities.

People who know they have imperfect knowledge of reality have the opportunity to look more deeply into situations where they disagree, and find the deeper truth that includes all their starting points as special cases. There always is a deeper truth, because they can always "run the fractal" to a deeper level of abstraction. From there, resolution of disputes is often possible. People who do not believe in external reality (or who can't tell the difference between external reality and their own internal picture of it, which amounts to the same thing) have no such opportunity. They just have his reality, her reality, my reality, your reality, and they are all different and they are all disconnected. There is no opportunity or requirement to find resolution or grow deeper understanding. In fact, with no requirement for consistency even in their own picture, they can choose a different reality - make it up differently - whenever they like. From there it's just a short step to an extreme form of antisocial behaviour where people feel that they can do whatever they like in their reality, because their reality is all that matters to them, and other people's realities don't even exist as far as they are concerned. Whenever they don't like the results, they can just change their story.

Although it sounds very peculiar and undesirable when described this way, this kind of existentialism perverted by the deductive mind acting alone is actually the most common personal philosophy found in the developed world at this time. It takes the ideas of authentic existentialism and turns them inside out (in the inside out pattern that we have already seen several times in this chapter). Personal responsibility becomes irresponsibility. The rejection of social definitions of success becomes a short sighted chasing of fashionable trends with no consideration of cost to self or others. As people trapped in their deductive minds adopt a philosophy that leads to complete imprisonment within a socially defined agenda they convince themselves that they have become completely liberated. Cults and schools of counselling or psychotherapy exist for the purpose of liberating people from emotional distress by eradicating their last shreds of conscience and self respect, and teaching this philosophy of existentialism perverted by the deductive mind acting alone. Although it is actually a denial of personal relevance that rationalises a futile pessimism, where the seeking of shallow fashion is all that is possible and even that cannot be experienced fully because history itself changes with the weather, existentialism perverted by the deductive mind acting alone is always presented as an example of the finest intellectual sophistication - probably because of the credibility of authentic existentialists including Laing and the writer Jean-Paul Sartre. Perhaps the most tragic example of this kind of entrapment is the new fashion of bug chasing, where some gay people seek to become HIV infected in order to reject social norms which stigmatise HIV positive people. It's a response which implicitly accepts the validity of the very closed world that it seeks to reject!

Although it is not generally recognised as such, one of the most damaging effects of existentialism perverted by the deductive mind working alone did not happen in the realms of psychology or social values at all. Instead it happened in quantum mechanics, the physics of the very smallest particles. As the 20th Century began, physicists began to learn that at the smallest scales, nature does not seem to work in the way that we have been used to seeing it behave at our own scale. The important word is "seem", because as mentioned in Chapter 1, Feynman's path integrals work for footballs as much as electrons. If we admit the idea that what we thought we knew already isn't the whole story, we find that to an extent at least, the same rules that apply in quantum mechanics also apply in traditional contexts.

One area where the old rules don't seem to work at all is that at the smallest scales, we always have to talk about probabilities rather than facts. When we throw dice or spin a roulette wheel we usually think we can expect a random result, but that is only because there are so many factors involved that we can't accurately predict which number will turn up. If we knew exactly what the physical state of the wheel was when we threw the ball onto it, we could predict which number would turn up. The result is actually completely fixed by the initial conditions, so "extra information" doesn't have to come from anywhere to decide what the result will be. At very small scales this just isn't so. Unlike the roulette wheel, there really isn't anything else that could hide a completely predictable system. If we put a radioactive source near a Geiger counter, no amount of information will help us predict exactly when the next click will occur. This caused a lot of concern amongst physicists, because all sensible rules of cause and effect seemed to have disappeared. Unfortunately the voice that became dominant in the discussions belonged to Niels Bohr, who was brilliant, cultured - and Danish.

Being Danish is not in itself a problem, but it probably had something to do with his admiration of another great Danish thinker, Søren Kierkegaard, who was the founder of existentialist philosophy. Bohr applied existentialist ideas to the new problem in physics, and concentrated not on what was happening in any objective reality within the experiments, but instead on what the people doing the experiments observed. Bohr said that at a fundamental physical level, there is no reality unless it has been observed. That is, he made the reality of the deductive mind's thinking space, and the denial of any objective reality which exists whether or not humans know what it is doing, a fundamental principle of physics! According to Bohr, it is conscious beings observing the universe who bring it into being. Quite how the conscious beings could observe something that they have not yet created by observing it was never clear, nor was it clear just how conscious a creature had to be to have this remarkable faculty of creating reality.

Many of Bohr's great colleagues didn't believe it. Albert Einstein believed there are "hidden variables" which we haven't found yet and which would make things more sensible again. This was why he made his famous comment, "God does not play dice". Erwin Schrödinger was open to the idea that consciousness is important in physics (we met him in Chapter 1, observing that somehow his conscious self was the laws of physics), but even he wasn't happy with Bohr's view. He suggested an experiment where a Geiger counter controlled the release of some poison, so that a cat in the same box would either survive or be killed depending on whether the counter clicked or not. Was he seriously expected to believe that the cat was half dead and half alive until someone opened the box and made an observation? More recently the physicist John S. Bell said this about Bohr's position in his book Speakable and Unspeakable in Quantum Mechanics:

"Rather than being disturbed by the ambiguity in principle, by the shiftiness of the division between 'quantum system' and 'classical apparatus', he seemed to take satisfaction in it. He seemed to revel in the contradictions, for example between 'wave' and 'particle', that seem to appear in any attempt to go beyond the pragmatic level. Not to resolve these contradictions and ambiguities, but rather to reconcile us to them, he put forward a philosophy which he called 'complementarity'. He thought that 'complementarity' was important not only for physics but for the whole of human knowledge. The justly immense prestige of Bohr has led to the mention of complementarity in most text books of quantum theory. But usually only in a few lines. One is tempted to suspect that the authors do not understand the Bohr philosophy sufficiently to find it helpful. Einstein himself had great difficulty in reaching a sharp formulation of Bohr's meaning. What hope then for the rest of us? There is very little I can say about 'complementarity'. But I wish to say one thing. It seems to me that Bohr used this word with the reverse of its usual meaning. Consider for example the elephant. From the front she is head, trunk and two legs. From the sides she is otherwise, and from top and bottom different again. These different views are complementary in the usual sense of the word. They supplement one another, and are consistent with one another, and they are all entailed by the unifying concept 'elephant'. It is my impression that to suppose Bohr used the word 'complementary' in this ordinary way would have been regarded by him as missing his point and trivialising his thought. He seems to insist rather that we must use in our analysis elements which contradict one another, which do not add up to, or derive from, a whole. By 'complementarity' he meant, it seems to me, the reverse: contradictoriness.
Bohr seemed to like aphorisms such as: 'the opposite of a deep truth is also a deep truth': 'truth and clarity are complementary'. Perhaps he took a subtle satisfaction in the use of a familiar word with the reverse of its familiar meaning."

Bell here picks up on some problems which we can recognise as characteristic of the deductive mind acting alone: the idea that there is no self-consistent universe that knows what it is doing, no matter how great our ignorance is; complacency in the face of contradictions instead of looking for the deeper truth that can sort them out; and the strange way that meanings and relationships turn inside out and become the exact opposite of what they really are. Perhaps it is no accident that the example Bell uses of the different ways that an elephant appears when examined from different points of view comes from a teaching story first told by the Sufi poet Jalaluddin Rumi, where three blind men all feel different parts of an elephant. One feels a tree (a leg), one feels a wall (the body) and one feels a rope (the trunk). The three men must find the deep truth that unifies their seemingly contradictory experiences of the elephant.

Bohr's use of existentialism perverted by the deductive mind acting alone has served as a barrier to understanding for nearly a hundred years, because it teaches that no deeper understanding is possible. Exactly as it does in other walks of life, this kind of thinking becomes a philosophy of futility and despair which is not justified by evidence found in the universe around us. So even in the most elevated intellectual circles, where evidence provided by experiments performed in the mysterious and wonderful real universe all around us informs the discussions, the trap of the deductive mind acting alone can sneak up, close the human mind, make it believe it contains all of reality, and stop further growth and understanding.

To escape from this trap is easy. We simply have to bear in mind that what we know is only ever a small fraction of what the universe that we are a part of is doing. That reality exists and is completely consistent within itself. We can trust the universe to make sense, far more than we can trust our own assumptions and erroneous ideas. When we do this, our understanding improves and we grow. We evolved our deductive mind in order to exploit the consistency and make sense of the moving situations we see all around us. All we have to do is remember that we must always use it in the context of our impressions, and allow a spirit of questioning and exploration to guide us.

Copyright Alan G. Carter 2003.
